Introduction: Why a Test Strategy Matters

Are you finding critical bugs slipping into production despite your team’s best efforts? Does your testing often feel chaotic, struggling to keep pace with rapid Agile sprints? In the fast-evolving landscape of modern software development, Agile methodologies like Scrum and Kanban have revolutionized how teams build and deliver products. They champion collaboration, continuous feedback, and iterative releases, accelerating delivery and improving responsiveness. However, this velocity introduces unique challenges, especially when it comes to maintaining impeccable quality.

Without a well-defined Agile Test Strategy, testing can easily become a bottleneck or, worse, a chaotic afterthought. Critical bugs might evade detection, new features could inadvertently break existing functionality, and the overall stability and user experience of your product can suffer. This is precisely why a Test Strategy isn’t merely beneficial in Agile; it’s absolutely essential.

A Test Strategy serves as a high-level blueprint, articulating the scope, objectives, approach, and central focus of all testing activities. It brings vital clarity to the entire team, answering fundamental questions like:

- What needs to be tested? (e.g., new features, critical workflows, security aspects)

- How will testing be conducted? (e.g., manual, automated, exploratory testing)

- When should testing occur within the Agile development lifecycle?

- Who is responsible for which testing activities?

- Which tools, environments, and frameworks will support our quality assurance efforts?

More than just a document, a Test Strategy acts as a shared vision, aligning developers, testers, product owners, and other stakeholders. It ensures that quality remains an inherent part of the Agile process, not just a final gate. By providing structure, reducing ambiguity, and fostering accountability, it empowers teams to consistently deliver reliable, high-performing software, even under tight deadlines and evolving requirements.

In this comprehensive guide, we’ll dive deep into everything you need to know to establish a robust and scalable Test Strategy for Agile teams, including:

- A clear, practical definition of what a Test Strategy entails, with real-world examples.

- Why its importance is amplified in Agile development.

- The essential components every effective Test Strategy should encompass.

- A pragmatic, step-by-step approach to crafting your own strategy.

- Common pitfalls teams encounter and actionable strategies to overcome them.

- Best practices to continuously refine and evolve your strategy over time.

By the end of this post, you will understand how to implement a Test Strategy that integrates with your Agile process, strengthens team collaboration, and supports consistent, high-quality product delivery.

- Introduction: Why a Test Strategy Matters

- What is a Test Strategy?

- Why is a Test Strategy Important in Agile?

- Key Components of a Scalable Agile Test Strategy

- Testing Objectives: What Are We Trying to Achieve?

- Test Scope: What Will We Test (and What Not)?

- Test Levels & Types: What Types of Testing Will We Do?

- Roles and Responsibilities: Who Will Do What?

- Test Environment Management: Where Will We Test?

- Tools and Automation: What Tools Will We Use?

- Defect Management: How Will We Handle Bugs?

- Quality is Everyone’s Responsibility

- Steps to Build a Robust Test Strategy for Agile Teams

- Understand the Product, Vision, and Users:

- Collaborate Extensively with the Team:

- Identify What and How You’ll Test (Test Types and Coverage):

- Define What “Done” Means for Testing (Definition of Done – DoD):

- Choose Tools and Set Up Your Testing Framework:

- Keep Improving (Continuously Improve and Evolve):

- Best Practices for Agile Testing

- Metrics and Reporting for Quality Assurance

- Common Challenges in Agile Testing (and How to Overcome Them)

- Conclusion: Keep It Simple, Flexible, and Evolving

What is a Test Strategy?

A Test Strategy is a comprehensive, high-level document or framework that outlines how quality assurance will be approached throughout a software development project or product lifecycle. It’s distinct from a Test Plan, which is typically more detailed and specific to a particular release or sprint. Think of the Test Strategy as the long-lived navigation map for the entire journey, revised only occasionally, while a Test Plan is the detailed daily itinerary for each leg of the trip.

It helps the entire team, from developers to product owners, understand the overall testing philosophy and ensures that the final product meets its quality benchmarks before it’s released to users.

A Test Strategy Answers These Critical Questions:

What will we test?

This defines the scope of testing. It details which parts of the software (e.g., specific features, modules, user flows) will be subjected to quality checks. This includes both functional testing (verifying what the product does) and non-functional testing (assessing how well it performs, its security, usability, etc.).

Example:

“We will focus on testing the new user registration flow, payment gateway integration, and existing profile management features. Performance under peak load will also be assessed.”

How will we test it?

This outlines the methodologies and types of testing to be employed. It specifies the balance between manual and automated testing, the tools to be used, and the approach to test data management and environment setup.

Example:

“New feature testing will involve a combination of manual and exploratory testing. Regression testing will be fully automated using Playwright. Test data will be generated via a dedicated faker service.”

When and who will test it?

This addresses the timing and responsibilities. It indicates when different testing phases will occur within the Agile sprint cycle and identifies the key individuals or roles involved in various testing activities.

Example:

“Unit tests will be performed by developers during coding. QA engineers will conduct integration and system testing throughout the sprint. User Acceptance Testing (UAT) will be done by the Product Owner and key stakeholders in the sprint’s final days.”

Beyond these core questions, a robust Test Strategy also typically includes:

- Test Goals: Clear, measurable objectives that the testing efforts aim to achieve (e.g., “Achieve 95% automation coverage for critical user flows,” “Reduce production defects by 20%”).

- Test Deliverables: What documents or artifacts will be created (e.g., test cases, bug reports, test summaries, automation scripts).

- Risks and Contingencies: Identification of potential testing-related risks (e.g., environment instability, lack of skilled resources) and strategies to mitigate them.

- Defect Management Process: A defined workflow for reporting, tracking, prioritizing, and resolving bugs.

- Communication Plan: How testing progress, issues, and reports will be communicated to the team and stakeholders.

- Standards and Guidelines: Any specific quality standards, regulatory compliance (e.g., GDPR), or architectural guidelines that testing must adhere to.

Why is a Test Strategy Important in Agile?

Agile development thrives on speed, flexibility, and continuous delivery. New features and updates are released frequently, sometimes every two to three weeks. In such a high-velocity environment, testing must be continuous, integrated, and strategically planned. This is precisely where a well-crafted Agile Test Strategy becomes indispensable.

What Happens If There’s No Clear Test Strategy in Agile?

The absence of a robust test strategy in an Agile context can lead to significant problems:

Quality May Plummet: Without a predefined plan for what, how, and when to test, critical features or edge cases might be overlooked entirely. This directly results in more bugs and errors slipping into production, severely impacting user experience and damaging brand reputation.

Example: A financial app releases a new payment feature without a strategy for performance testing. Under peak load, the app crashes, leading to lost transactions and user frustration.

Bugs Are Found Too Late (Costly Rework): Agile’s essence is early feedback. If testing is disorganized or an afterthought, defects might only surface during user acceptance testing (UAT) or, worse, after deployment to production. Fixing bugs late in the cycle is typically far more expensive and time-consuming than catching them early during development, because more code, data, and users already depend on the faulty behavior.

Example: A critical security vulnerability is discovered only after the application is live, requiring an urgent, costly hotfix and potential data breach notifications.

Teams Become Uncoordinated and Inefficient: Agile mandates close collaboration among developers, testers, product owners, and other stakeholders. Without a unified test strategy, each team member might adopt a different testing approach, leading to confusion, duplicated efforts, missed handovers, and overall project delays. Quality becomes “someone else’s problem” rather than a shared responsibility.

Example: Developers write unit tests, but QAs don’t know the scope, leading to redundant UI tests for already covered functionality, wasting valuable sprint time.

An effective Test Strategy acts as the guardrail for quality in the fast lane of Agile, ensuring that speed never compromises the integrity of the software delivered.

Key Components of a Scalable Agile Test Strategy

A Test Strategy in Agile isn’t merely a static document; it’s a living blueprint that guides the entire team towards delivering a high-quality product. Let’s break down its essential parts:

Testing Objectives: What Are We Trying to Achieve?

Before initiating any testing activities, clarity on why we are testing is paramount. In an Agile context, the primary objectives of testing extend beyond just finding bugs:

- Find bugs early: Proactively identify and address defects as close to their introduction as possible, minimizing the cost and effort of rework.

- Maintain high quality (Regression Stability): Ensure that every new build is stable, performs well, and introduces no regressions to existing functionality. Each iteration should build upon a solid foundation.

- Support rapid, confident releases: Enable the team to release new features quickly and reliably, instilling confidence in the product’s stability.

- Mitigate business risks: Identify and address potential issues that could impact business operations, user satisfaction, or regulatory compliance.

- Verify requirements satisfaction: Confirm that the developed features meet the user stories and acceptance criteria defined by the product owner.

Example: “Our objective is to ensure that the user onboarding flow is flawless, achieving 99.9% uptime during peak usage, and that all new security features comply with OWASP Top 10 guidelines.”

Test Scope: What Will We Test (and What Not)?

In each sprint or release, it’s crucial to delineate what falls in scope and out of scope for testing. This helps the team focus efforts where they are most needed and avoid unnecessary testing.

- In Scope: New features, modified functionalities, high-risk modules, critical user paths, integrations with external systems, and areas with a history of defects.

- Out of Scope: Unchanged legacy modules with proven stability, very low-priority features with negligible impact, or third-party components beyond the team’s control (though their integration points would be in scope).

Example: “For Sprint 15, the new ‘Dark Mode’ feature and enhancements to the ‘Search’ functionality are in scope. The existing ‘Admin Dashboard’ and third-party analytics integrations (beyond data flow) are out of scope for this sprint’s focused testing.”

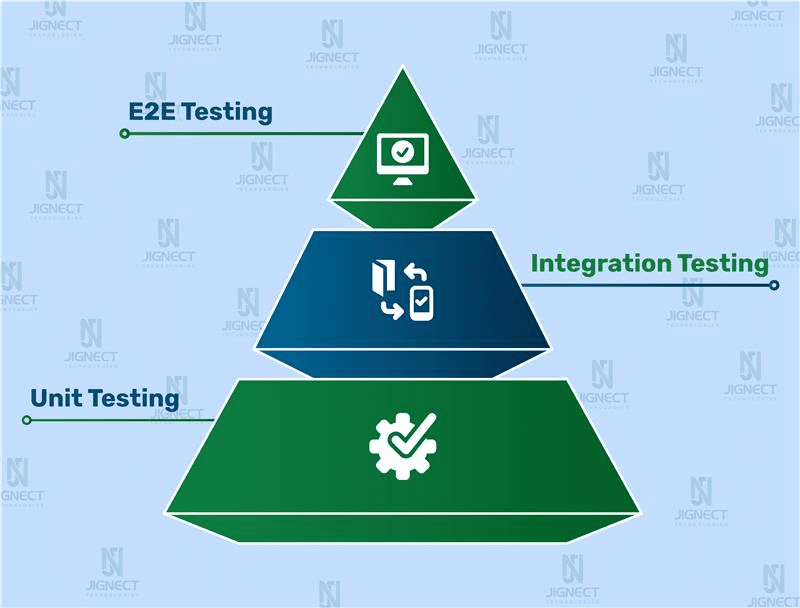

Test Levels & Types: What Types of Testing Will We Do?

Testing isn’t a single activity; it’s a layered approach, with each layer validating different aspects of the software. A scalable Agile Test Strategy incorporates a diverse set of testing types:

- Unit Testing:

Performed by developers on isolated code units (functions, methods) to ensure they work as intended.

Example: A developer tests a calculateTax() function to confirm it returns the correct tax amount for various inputs.

- Integration Testing:

Verifies that different modules or services interact correctly when combined.

Example: Testing that the user authentication service correctly passes user data to the profile management service.

- System Testing:

End-to-End testing of the complete, integrated application to ensure it meets specified requirements.

Example: Testing the entire user journey from signup and login to making a purchase and receiving a confirmation.

- Acceptance Testing (UAT):

Performed by Product Owners or actual end-users to validate that the software meets business requirements and user needs.

Example: A product owner uses the new e-commerce checkout flow to confirm it aligns with the user story and business expectations.

- Regression Testing:

Crucial in Agile, ensuring that new changes or bug fixes do not negatively impact existing, previously working functionality.

Example: After adding a new product category, running automated tests to ensure existing payment methods still work correctly.

- Exploratory Testing:

A simultaneous learning, test design, and test execution activity.

Example: A tester, playing the role of a frustrated user, tries to intentionally break the form input fields using special characters or excessive text.

- Performance Testing:

Evaluating the system’s responsiveness, stability, scalability, and resource usage under various load conditions.

Example: Running load tests to see how the application performs with 1000 concurrent users, or measuring page load times under stress.

- Security Testing:

Identifying vulnerabilities and weaknesses in the application that could lead to security breaches.

Example: Performing penetration testing or static code analysis to find potential SQL injection flaws or broken authentication mechanisms.

- Usability Testing:

Assessing how easy and intuitive the application is for its intended users.

Example: Observing real users completing tasks on the application to identify confusing navigation or unclear error messages.

- Accessibility Testing:

Ensuring the application is usable by people with disabilities (e.g., visual impairments, motor disabilities).

Example: Using screen readers or keyboard-only navigation to verify a website is compliant with WCAG guidelines.
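To make the unit-testing level above more concrete, here is a minimal sketch in Python using the standard unittest module. The calculate_tax function, its flat-rate signature, and the round-half-up-to-cents rule are assumptions made for illustration; they stand in for whatever tax logic your product actually has.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP


def calculate_tax(amount: Decimal, rate: Decimal) -> Decimal:
    """Return the tax owed on `amount` at a flat `rate`, rounded to cents.

    Hypothetical implementation, for illustration only.
    """
    if amount < 0:
        raise ValueError("amount must not be negative")
    return (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


class CalculateTaxTest(unittest.TestCase):
    def test_typical_amount(self):
        self.assertEqual(
            calculate_tax(Decimal("100.00"), Decimal("0.20")), Decimal("20.00")
        )

    def test_zero_amount(self):
        self.assertEqual(
            calculate_tax(Decimal("0"), Decimal("0.20")), Decimal("0.00")
        )

    def test_rounds_half_up_to_cents(self):
        # 10.25 * 0.10 = 1.025, which rounds half up to 1.03
        self.assertEqual(
            calculate_tax(Decimal("10.25"), Decimal("0.10")), Decimal("1.03")
        )

    def test_negative_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            calculate_tax(Decimal("-1"), Decimal("0.20"))
```

Saved to a file, these tests can be run with python -m unittest followed by the file name. Note how each test covers one input class (typical, boundary, rounding, invalid), which is the "various inputs" idea from the example above.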

Roles and Responsibilities: Who Will Do What?

In Agile, quality is a shared endeavor. While specific roles lead certain testing activities, everyone contributes to the overall quality of the product.

- QA Engineers/Testers: Design and execute test cases, perform exploratory testing, manage test data, report and re-test bugs, contribute to automation efforts, and provide quality insights.

- Developers: Write and maintain unit tests, perform integration testing, participate in code reviews, and fix bugs promptly.

- Product Owners/Business Analysts: Define clear user stories and acceptance criteria, participate in User Acceptance Testing (UAT), and prioritize defects based on business value.

- Scrum Master/Agile Coach: Facilitates team collaboration, removes impediments, and ensures quality remains a focus without becoming a bottleneck.

- Everyone: Actively participates in discussions about quality, provides feedback, and helps identify potential issues early.

Test Environment Management: Where Will We Test?

Reliable and consistent test environments are fundamental for accurate testing. An Agile Test Strategy outlines how these environments will be provisioned, maintained, and refreshed.

- Development (Dev) Environment: For developers to test individual code changes and unit integrations.

- QA Environment: A stable environment for comprehensive feature testing, integration testing, and regression cycles. This should ideally mirror production settings as closely as possible.

- User Acceptance Testing (UAT) Environment: A dedicated environment for product owners and stakeholders to conduct final validation before release.

- Production (Prod) Environment: The live environment where the application is deployed. Monitoring and post-release validation occur here.

Key Considerations:

- Test Data Management: Strategies for creating, managing, and refreshing realistic, anonymized test data (e.g., using Faker.js or Mockaroo).

- Mock Services/APIs: Utilizing mock services or API virtualization to test dependencies that are not yet available or stable.

- Environment Consistency: Ensuring environments are consistent across different testing stages and regularly refreshed or backed up to prevent stale data.

Example: “We will use Docker containers for local development environments, a dedicated AWS EC2 instance for QA, and a staging environment mirroring production for UAT. Test data for sensitive areas will be anonymized from production snapshots monthly.”
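The Mock Services/APIs consideration above can be sketched with Python’s standard unittest.mock module. CheckoutService, the gateway’s charge method, and the response shape are hypothetical names invented for this example; the point is only that the test never touches a real payment provider.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical service that depends on an external payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def pay(self, order_id: str, amount_cents: int) -> str:
        response = self.gateway.charge(order_id=order_id, amount_cents=amount_cents)
        return "confirmed" if response["status"] == "approved" else "payment_failed"


def test_successful_payment_confirms_order():
    # The mock stands in for a gateway that is not yet available or stable.
    gateway = Mock()
    gateway.charge.return_value = {"status": "approved"}

    assert CheckoutService(gateway).pay("order-1", 2500) == "confirmed"
    # Verify the service called the dependency exactly once, with the right data.
    gateway.charge.assert_called_once_with(order_id="order-1", amount_cents=2500)


def test_declined_payment_is_reported():
    gateway = Mock()
    gateway.charge.return_value = {"status": "declined"}

    assert CheckoutService(gateway).pay("order-2", 2500) == "payment_failed"
```

Passing the gateway in through the constructor is the design choice that makes this possible: the same service can receive the real client in production and a mock in the QA environment.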

Tools and Automation: What Tools Will We Use?

Leveraging the right tools and embracing automation are critical for achieving speed and scalability in Agile testing.

Automation Tools:

- UI Automation: Selenium WebDriver, Cypress, Playwright

- API Automation: Requests, REST Assured, RestSharp

- Mobile Automation: Appium, WebdriverIO, CodeceptJS

Test Management Tools: JIRA (with plugins like Zephyr, Xray), TestRail, Azure DevOps

CI/CD Tools: Jenkins, Azure DevOps Pipelines, CircleCI

Performance Testing Tools: Apache JMeter, k6, LoadRunner

Security Testing Tools: Burp Suite, Pynt, Intruder, and static application security testing (SAST) tools.

Reporting and Analytics Tools: Dashboards (e.g., using Grafana) to visualize test results, automation trends, and quality metrics.

Example: “We will automate critical end-to-end user flows using Cypress, integrate these tests into our GitHub Actions pipeline, and manage all test cases and defects within Jira.”

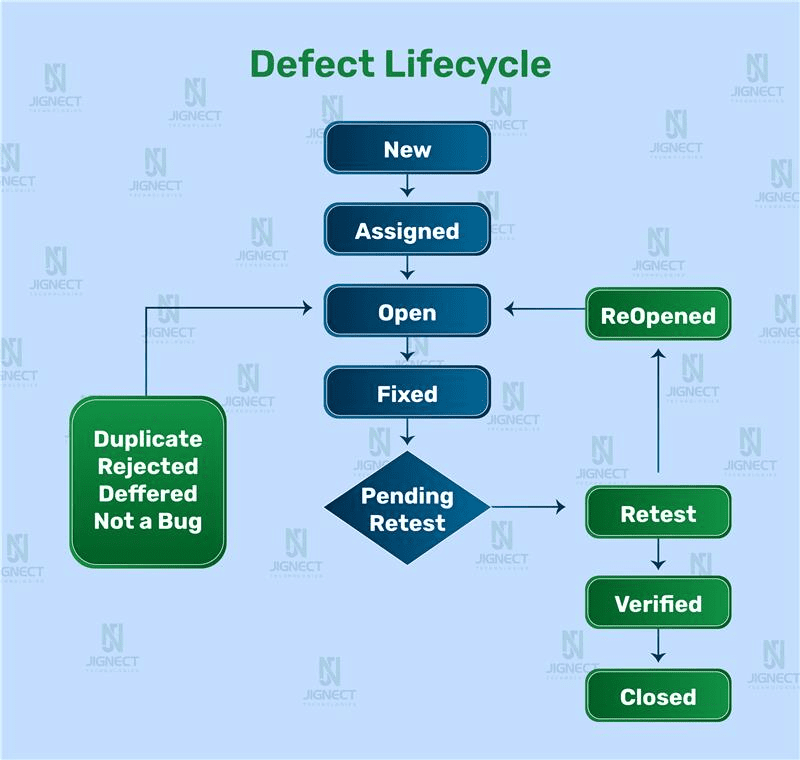

Defect Management: How Will We Handle Bugs?

A clear and efficient defect management process is vital to ensure that bugs are tracked, prioritized, and resolved without disrupting the Agile flow.

- Bug Tracking Tool: A centralized system like JIRA, Azure DevOps, or Bugzilla for logging and tracking defects.

- Bug Lifecycle: “New” → “Assigned” → “Open” → “Fixed” → “Pending Retest” → “Re-tested” → “Closed” or “Rejected”

- Priority and Severity Rules: Establish clear guidelines for assigning priority (e.g., P0: Blocker, P1: High, P2: Medium, P3: Low) and severity (e.g., Critical, Major, Minor, Cosmetic) to defects, enabling the team to focus on the most impactful issues.

- Example: “A payment gateway failure is a P0 Blocker, requiring immediate attention. A minor UI misalignment is a P3 Cosmetic, to be addressed in a future sprint.”

- Root Cause Analysis (RCA): For critical or frequently recurring defects, establish a process for conducting RCA to understand why the bug occurred and implement preventative measures.

- Communication: Regular communication on defect status during stand-ups and sprint reviews.

Example: “All defects will be logged in Jira with clear steps to reproduce, expected vs. actual results, and screenshots. High-priority bugs found in the QA environment will trigger a team notification for quick resolution.”
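The bug lifecycle listed above can be treated as a small state machine, which is roughly what a tracker’s workflow configuration enforces. The sketch below encodes only the transitions named in that lifecycle; the Status enum and the advance function are hypothetical, and a real workflow may allow additional moves (such as reopening) that are not modeled here.

```python
from enum import Enum


class Status(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    OPEN = "Open"
    FIXED = "Fixed"
    PENDING_RETEST = "Pending Retest"
    RETESTED = "Re-tested"
    CLOSED = "Closed"
    REJECTED = "Rejected"


# Allowed next states, taken directly from the lifecycle described above.
ALLOWED = {
    Status.NEW: {Status.ASSIGNED},
    Status.ASSIGNED: {Status.OPEN},
    Status.OPEN: {Status.FIXED},
    Status.FIXED: {Status.PENDING_RETEST},
    Status.PENDING_RETEST: {Status.RETESTED},
    Status.RETESTED: {Status.CLOSED, Status.REJECTED},
    Status.CLOSED: set(),
    Status.REJECTED: set(),
}


def advance(current: Status, target: Status) -> Status:
    """Move a defect to `target`, refusing transitions the workflow does not allow."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target


status = Status.NEW
for step in (Status.ASSIGNED, Status.OPEN, Status.FIXED,
             Status.PENDING_RETEST, Status.RETESTED, Status.CLOSED):
    status = advance(status, step)
print(status.value)
```

Writing the transitions down explicitly, even just in a table, makes it obvious when a defect skips a step such as retesting.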

Quality is Everyone’s Responsibility

In an Agile environment, the traditional “QA as the sole gatekeeper of quality” mentality is outdated and detrimental. A truly scalable Agile Test Strategy champions the philosophy that quality is a collective responsibility shared by every member of the development team and stakeholders.

- Developers’ Role: Beyond writing code, developers are integral to quality. They are responsible for writing robust unit tests (e.g., using JUnit for Java, NUnit for .NET), performing thorough code reviews, and conducting early integration tests. They fix bugs identified in their code proactively.

- Product Owners’ Role: Product Owners contribute by providing clear, unambiguous user stories and acceptance criteria. Their participation in User Acceptance Testing (UAT) ensures the product meets genuine user needs and business objectives.

- Designers’ Role: Ensuring designs are technically feasible and provide an intuitive user experience, proactively identifying potential usability issues.

- Team Collaboration: Quality discussions should be a regular part of sprint planning, daily stand-ups, and retrospectives. When a bug is found, the focus shifts from “who broke it?” to “how can we fix it and prevent it from happening again?” This fosters a culture of shared ownership and continuous improvement.

- Example: During sprint planning, developers and QAs collaboratively review user stories, identifying potential edge cases for testing and agreeing on how to handle data validation, shifting quality considerations left from the very beginning.

Steps to Build a Robust Test Strategy for Agile Teams

Creating an effective Agile Test Strategy involves a series of collaborative and iterative steps. It’s not a one-time activity but an evolving process.

Understand the Product, Vision, and Users:

Dive into User Stories & Requirements:

Thoroughly read and comprehend user stories, epics, and any available functional/non-functional requirements. These are your primary source of understanding what needs to be built and, consequently, tested.

- Example: For a user story “As a customer, I want to be able to pay with a credit card,” understand the specific card types, security requirements, and error handling.

Grasp Business Goals & Value:

Understand why a feature is being built and its intended business impact. This helps prioritize testing efforts and ensures that tests align with overall product objectives.

Know Your Target Audience:

Who are the end-users? What are their technical capabilities? What devices do they use? Understanding the user base helps define relevant test scenarios, including accessibility needs.

Ask Probing Questions:

Engage actively with Product Owners, Business Analysts, and Developers. If something is unclear, ask for clarification.

- Tip for beginners: Never jump into testing until you have a crystal-clear understanding of the desired outcome and success criteria for the feature.

Collaborate Extensively with the Team:

Participate in All Agile Ceremonies:

Active involvement in sprint planning, daily stand-ups, backlog refinement, and retrospectives is paramount. This ensures testers are always aware of upcoming features, potential changes, and ongoing issues.

Shared Ownership Discussions:

Use these forums to discuss testing approaches, potential risks, and resource needs. Share your test ideas early in the sprint planning.

Continuous Feedback Loop:

Encourage a culture where developers consult with QAs early on implementation details, and QAs provide quick, actionable feedback on builds.

Teamwork makes testing smoother and faster. Don’t be afraid to speak up and contribute your quality perspective!

Identify What and How You’ll Test (Test Types and Coverage):

Risk-Based Testing:

Prioritize testing efforts based on the risk and impact of a feature. Complex, high-traffic, or business-critical modules warrant more extensive and varied testing.
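One common way to put risk-based prioritization into practice is to score each feature as likelihood of failure multiplied by business impact and test the highest scores first. The sketch below assumes a 1 to 5 scale for both factors; the scale, the Feature class, and the sample scores are illustrative assumptions, not values from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    likelihood: int  # chance of failure, 1 (low) to 5 (high); assumed scale
    impact: int      # business impact of a failure, 1 (low) to 5 (high)

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(features):
    """Return features ordered from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Hypothetical sprint backlog with team-estimated scores.
backlog = [
    Feature("Dark Mode toggle", likelihood=2, impact=1),
    Feature("Payment gateway integration", likelihood=4, impact=5),
    Feature("Search enhancements", likelihood=3, impact=3),
]

for feature in prioritize(backlog):
    print(f"{feature.risk_score:>2}  {feature.name}")
```

With these sample scores the payment gateway integration comes first and the Dark Mode toggle last, so the deepest and most varied testing goes where a failure would hurt most.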

Select Appropriate Test Types:

Determine the right blend of manual, automated, exploratory, performance, and security testing based on the feature’s nature, team’s capacity, and available tools.

Automate Regression Tests:

Systematically identify and automate critical regression test suites. These are vital for maintaining product stability with every new deployment.

Test Smart, Not Just Hard: Focus your energy on providing maximum quality assurance with efficient testing methods.

- Example: For a brand new feature, start with manual and exploratory testing. Once stable, automate its core flows and integrate them into the regression suite.

Define What “Done” Means for Testing (Definition of Done – DoD):

A universally agreed-upon Definition of Done (DoD) provides clear criteria for when a user story or task is considered “complete” from a testing perspective. This avoids ambiguity and ensures consistent quality.

Example DoD for Testing (Team-Agreed):

- All high-priority (P0/P1) test cases passed for the feature.

- No critical or major (Severity 1/2) bugs remain in the feature area.

- Automated regression tests are green for the impacted modules.

- Code coverage meets the defined threshold (e.g., 80% for unit tests).

- Security and accessibility checks have been performed and passed.

- The feature has been reviewed by the Product Owner and signed off.

Having clear, team-agreed criteria avoids confusion, prevents features from “slipping through the cracks,” and ensures accountability for quality.
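Some teams go one step further and turn the Definition of Done into an automated check that runs before a story is closed. The following is a rough sketch of that idea; the StoryStatus fields and the unmet_criteria function are hypothetical, and in practice the values would come from your test management and CI tools rather than being typed in by hand.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoryStatus:
    failed_high_priority_tests: int
    open_critical_or_major_bugs: int
    regression_green: bool
    unit_coverage_percent: float
    security_and_accessibility_passed: bool
    product_owner_signed_off: bool


def unmet_criteria(status: StoryStatus, coverage_threshold: float = 80.0) -> List[str]:
    """Return the DoD criteria that are not yet satisfied (empty list means done)."""
    checks = {
        "all P0/P1 test cases passed": status.failed_high_priority_tests == 0,
        "no critical or major bugs open": status.open_critical_or_major_bugs == 0,
        "automated regression is green": status.regression_green,
        "unit coverage meets threshold": status.unit_coverage_percent >= coverage_threshold,
        "security and accessibility checks passed": status.security_and_accessibility_passed,
        "Product Owner sign-off": status.product_owner_signed_off,
    }
    return [name for name, satisfied in checks.items() if not satisfied]


story = StoryStatus(
    failed_high_priority_tests=0,
    open_critical_or_major_bugs=1,
    regression_green=True,
    unit_coverage_percent=72.5,
    security_and_accessibility_passed=True,
    product_owner_signed_off=False,
)
for item in unmet_criteria(story):
    print("NOT DONE:", item)
```

Reporting which criteria are unmet, rather than a single pass/fail flag, keeps the conversation in stand-up focused on what still needs to happen.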

Choose Tools and Set Up Your Testing Framework:

Select Lightweight, Agile-Friendly Tools:

Opt for tools that integrate well with your Agile workflow and don’t introduce unnecessary overhead. Prioritize those that support rapid feedback and collaboration.

Build Reusable Frameworks:

Instead of writing tests from scratch for every new feature, invest in building reusable test automation frameworks, libraries, or functions. This significantly speeds up test creation and maintenance.

Integrate with CI/CD:

Ensure your chosen tools and framework can be seamlessly integrated into your Continuous Integration/Continuous Delivery (CI/CD) pipeline.

Example: “We’ll use Cypress for end-to-end UI automation, with test data managed via a shared utility. Our automation tests will run automatically as part of our Azure DevOps CI pipeline.”

Remember, tools should empower your team, not become a burden or a bottleneck.

Keep Improving (Continuously Improve and Evolve):

A Scalable Test Strategy is not static. It’s a living document that adapts and improves over time, reflecting lessons learned and evolving product needs.

Utilize Retrospectives:

After each sprint, use the team retrospective to discuss what worked well in testing, what challenges were faced, and what could be improved.

Gather Feedback:

Actively solicit feedback from developers, product owners, and even end-users about the quality of releases and the effectiveness of testing efforts.

Regularly Review and Update:

Periodically review your entire Test Strategy (e.g., quarterly or after major releases) to ensure it remains relevant, addresses new challenges, and incorporates successful practices.

Agile is fundamentally about adaptation and continuous improvement. Your testing approach should embody this principle.

Best Practices for Agile Testing

In the fast-paced world of Agile, adopting smart testing practices is key to maintaining quality and accelerating delivery.

Shift-Left Testing – Start Early & Continuously

Description: This fundamental Agile principle advocates for moving testing activities earlier in the development lifecycle. Instead of testing being a phase after development, it’s integrated from the very beginning.

How to Do It:

- Testers participate in backlog refinement, sprint planning, and daily stand-ups.

- Ask clarifying questions about requirements, potential edge cases, and acceptance criteria before coding begins.

- Start designing test scenarios and automation scripts as soon as user stories are defined, even before the feature is fully coded.

- Incorporate Static Code Analysis (e.g., SonarQube) early to identify code quality issues and potential vulnerabilities.

Example: When a new “password reset” feature is being discussed, the QA engineer immediately raises questions about invalid email formats, account lockout policies, and security implications, influencing the design proactively.

Benefit: Reduces ambiguity, uncovers missing requirements, and prevents costly rework by catching issues at their cheapest point of fix.

Test Automation First (Strategically)

Description: Prioritize automating test cases that are repetitive, stable, frequently executed, and provide high return on investment (ROI). This frees up manual testers for more complex, exploratory, or critical testing.

What to Automate:

- Smoke Tests: Basic health checks of the application’s core functionality.

- Regression Tests: Ensuring existing features remain unbroken after new changes.

- Common User Flows: Login, signup, search, payment processing, etc.

- API Tests: Often more stable and faster than UI tests, providing excellent coverage for business logic.

Tools:

- UI: Selenium, Playwright, Cypress

- API: Postman/Newman, REST Assured

Example: Automating the entire user registration and login flow ensures that every new code deployment immediately validates this critical entry point.

Benefit: Significantly reduces manual effort, ensures consistent test execution, provides rapid feedback, and improves overall test coverage over time.

Integrate Tests with CI/CD for Continuous Feedback

Description: Embed your automated tests directly into the Continuous Integration/Continuous Delivery (CI/CD) pipeline. This means tests run automatically every time new code is committed, providing immediate feedback on code quality and preventing regressions.

How to Do It: Configure your CI/CD pipeline (e.g., Jenkins, GitHub Actions, GitLab CI/CD, Azure DevOps Pipelines) to trigger unit, integration, and smoke tests upon every code push or pull request.

Example: A developer pushes code for a new feature. The CI pipeline automatically runs unit tests, then API integration tests, and finally a quick UI smoke test. If any fail, the developer is notified instantly, allowing for quick fixes.

Benefit: Maintains code quality, provides instant feedback loops, drastically reduces the time to detect and fix bugs, and builds team confidence in continuous delivery.
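The staged, fail-fast behavior in the example above (unit tests, then API integration tests, then a UI smoke test) is normally configured in the CI tool itself, but the underlying logic is simple enough to sketch in Python. The run_pipeline function and the stage callables below are hypothetical stand-ins; in a real pipeline each stage would invoke your actual test commands.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Optional[str]:
    """Run stages in order; return the name of the first failing stage, or None."""
    for name, run in stages:
        print(f"running {name} ...")
        if not run():
            # Fail fast: slower stages are skipped so feedback arrives sooner.
            print(f"{name} failed; skipping later stages")
            return name
    print("all stages passed")
    return None


# Placeholder stages ordered from fastest to slowest.
stages = [
    ("unit tests", lambda: True),
    ("API integration tests", lambda: False),  # simulated failure
    ("UI smoke test", lambda: True),
]

failed_stage = run_pipeline(stages)
```

Ordering stages from cheapest to most expensive means most failures are reported within the first minutes of a push, and the slower UI checks only run on code that already passed the faster ones.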

Embrace Exploratory Testing for Deeper Insights

Description: While automation excels at repetitive checks, exploratory testing is a powerful technique for discovering new, unscripted bugs and usability issues. It involves testers simultaneously learning about the application, designing tests on the fly, and executing them, often like an end-user would.

How to Do It:

- Testers use their intuition and experience to navigate the application creatively.

- Try unusual inputs, combine unexpected actions, and explore edge cases that scripted tests might miss.

- Focus on “test charters” (e.g., “Explore the checkout process for 30 minutes, looking for usability issues”).

Example: A tester, during an exploratory session, accidentally discovers that if they change their shipping address while a payment is pending, the order gets corrupted – an edge case not covered by typical test scripts.

Benefit: Uncovers usability flaws, visual defects, logical errors, and hidden issues that enhance the overall user experience and product robustness.

Foster Peer Reviews and Collaboration

Description: Encourage a culture of reviewing testing artifacts, including test cases, automation scripts, and even bug reports, among team members. This promotes knowledge sharing and identifies blind spots.

How to Do It:

- Use pull requests for automation code, requiring reviews from other QAs or even developers.

- Hold short review meetings to walk through critical test cases or test plans.

- Ask for feedback on the clarity, reproducibility, or severity of reported bugs.

- Practice Pair Testing, where a developer and a QA engineer work together on a feature, combining their perspectives for more comprehensive testing.

Example: A QA engineer reviews another’s automation script and suggests a more robust way to handle dynamic element IDs, improving the script’s stability.

Benefit: Increases the accuracy and completeness of test coverage, reduces individual blind spots, improves overall test quality, and fosters collective ownership.

Manage Test Data Effectively

Description: Access to relevant, reliable, and secure test data is as crucial as the tests themselves. Poor test data can lead to flaky tests or missed defects.

Best Practices:

- Generate Realistic Data: Use mock data generators (e.g., Faker.js, Mockaroo) to create diverse and realistic data sets without using sensitive real information.

- Sanitize Production Data: If using production data for testing, ensure all Personally Identifiable Information (PII) is anonymized or removed to comply with privacy regulations like GDPR or HIPAA.

- Create Reusable Data Sets: Develop and maintain curated test data sets for specific scenarios, especially for regression testing.

- Automate Data Setup/Teardown: Integrate test data creation and cleanup into your automation scripts to ensure tests run in a clean, consistent state.
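
The setup/teardown practice above can be sketched in a few lines. This is a minimal illustration, not a prescribed implementation: it assumes an in-memory SQLite database stands in for the real test database, and the `orders` table, `seeded_orders_db`, and `count_orders` are hypothetical names invented for the example.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def seeded_orders_db(rows):
    """Create a fresh in-memory database, seed it, and always close it afterwards."""
    conn = sqlite3.connect(":memory:")
    try:
        # Setup: build the schema and load the test data
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
        )
        conn.executemany(
            "INSERT INTO orders (customer, total) VALUES (?, ?)", rows
        )
        conn.commit()
        yield conn
    finally:
        # Teardown: closing an in-memory database discards all of its data
        conn.close()


def count_orders(conn):
    """Hypothetical function under test: number of stored orders."""
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


# Each `with` block starts from a clean, known state
with seeded_orders_db([("alice", 19.99), ("bob", 5.00)]) as conn:
    order_count = count_orders(conn)

with seeded_orders_db([]) as conn:
    empty_count = count_orders(conn)
```

Because every test builds and discards its own data, one test's leftovers cannot affect the next. Most test frameworks offer an equivalent fixture mechanism for the same purpose.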

Example: Instead of manually entering credit card details for payment tests, use a data generator to create hundreds of unique, valid, and invalid card numbers for comprehensive testing of the payment gateway.
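
As a rough sketch of what such a generator might do, the snippet below builds synthetic card numbers that either pass or fail the Luhn checksum, which is the check digit scheme used by payment card numbers. The function names, the `4` prefix, and the 16-digit length are assumptions chosen for illustration. The generated numbers only satisfy the checksum and are not tied to any real account; a payment provider's sandbox will usually document its own test card numbers.

```python
import random


def luhn_check_digit(partial: str) -> int:
    """Return the Luhn check digit for a number that is missing its last digit."""
    total = 0
    # Walk right to left; the rightmost digit of the partial number is doubled
    for i, ch in enumerate(reversed(partial)):
        digit = int(ch)
        if i % 2 == 0:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return (10 - total % 10) % 10


def is_luhn_valid(number: str) -> bool:
    """True when the last digit matches the Luhn check digit of the rest."""
    return (
        len(number) > 1
        and number.isdigit()
        and luhn_check_digit(number[:-1]) == int(number[-1])
    )


def make_card_number(rng, prefix="4", length=16, valid=True):
    """Build a synthetic card number that passes (or deliberately fails) Luhn."""
    body_len = length - len(prefix) - 1
    body = prefix + "".join(str(rng.randrange(10)) for _ in range(body_len))
    check = luhn_check_digit(body)
    if not valid:
        # Shifting the check digit by 1..9 always yields a wrong digit
        check = (check + rng.randrange(1, 10)) % 10
    return body + str(check)


rng = random.Random(42)  # seeded so that test runs are reproducible
valid_cards = [make_card_number(rng) for _ in range(100)]
invalid_cards = [make_card_number(rng, valid=False) for _ in range(100)]
```

Seeding the random generator keeps the data set identical between runs, which helps when a failing case needs to be reproduced.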

Benefit: Improves test accuracy, reduces flakiness, ensures test reproducibility, and maintains data security and privacy compliance.

Metrics and Reporting for Quality Assurance

A scalable Test Strategy isn’t complete without the ability to measure its effectiveness and communicate quality status. Metrics help teams understand their progress, identify bottlenecks, and make data-driven decisions for continuous improvement.

Key Quality Metrics to Track

- Defect Density: Number of defects found per unit of size (e.g., per 1,000 lines of code or per user story). A lower density generally suggests higher code quality, provided test coverage is comparable.

- Defect Escape Rate: Number of defects found in production divided by the total number of defects found (before and after release). A high escape rate indicates issues with testing effectiveness.

- Test Automation Coverage: Percentage of code or features covered by automated tests. This often includes unit test coverage, API test coverage, and UI test coverage.

- Test Execution Rate/Velocity: Number of tests executed per sprint/day/build.

- Test Pass Rate: Percentage of executed tests that passed.

- Lead Time for Bug Fixes: Time taken from a bug being reported to it being fixed and verified.

- Customer Satisfaction Scores (CSAT/NPS) related to quality: Indirectly reflects the impact of quality on users.
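
The ratio-based metrics in this list are simple arithmetic. The sketch below follows the definitions given above; the function names and the sample figures are made up for illustration and do not come from a real project.

```python
def defect_density(defects: int, size: float) -> float:
    """Defects per unit of size (e.g., per 1,000 lines of code or per story)."""
    if size <= 0:
        raise ValueError("size must be positive")
    return defects / size


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that were only found in production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def pass_rate(passed: int, executed: int) -> float:
    """Share of executed tests that passed."""
    return passed / executed if executed else 0.0


# Illustrative numbers only
density = defect_density(defects=12, size=8)            # 12 defects in 8 KLOC
escape = defect_escape_rate(found_in_production=3,
                            found_before_release=27)   # 3 of 30 defects escaped
passing = pass_rate(passed=188, executed=200)

print(f"Defect density: {density:.2f} per KLOC")
print(f"Defect escape rate: {escape:.0%}")
print(f"Test pass rate: {passing:.0%}")
```

Whatever unit a team picks for size, it needs to stay the same from sprint to sprint, otherwise the trend is not comparable.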

Reporting and Communication

- Transparent Dashboards: Utilize tools like Grafana, Jira Dashboards, or custom reporting solutions to visualize key metrics. These should be accessible to the entire team and stakeholders.

- Sprint Reviews: Present a summary of testing achievements, challenges, and quality status during sprint review meetings.

- Daily Stand-ups: Briefly mention any critical test failures or blocking issues.

- Automated Reports: Configure CI/CD pipelines to generate automated test reports that provide immediate feedback on build quality.
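
For automated reports, many test runners can emit JUnit-style XML, which a pipeline step can then condense into a short summary. The following is a minimal sketch under that assumption: the embedded sample report, the suite and test names, and the `summarize` function are all hypothetical, and real reports may carry extra attributes or nesting.

```python
import xml.etree.ElementTree as ET

# Hypothetical report in the common JUnit XML layout
SAMPLE_REPORT = """<testsuite name="checkout" tests="4" failures="1" errors="0" skipped="1">
  <testcase classname="checkout" name="test_add_item"/>
  <testcase classname="checkout" name="test_apply_coupon">
    <failure message="expected 90.0, got 100.0"/>
  </testcase>
  <testcase classname="checkout" name="test_gift_wrap"><skipped/></testcase>
  <testcase classname="checkout" name="test_pay"/>
</testsuite>"""


def summarize(xml_text):
    """Count passed, failed, and skipped test cases and list the failed ones."""
    root = ET.fromstring(xml_text)
    counts = {"passed": 0, "failed": 0, "skipped": 0}
    failed_names = []
    # iter() also finds test cases nested under a <testsuites> wrapper
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            counts["failed"] += 1
            failed_names.append(case.get("name"))
        elif case.find("skipped") is not None:
            counts["skipped"] += 1
        else:
            counts["passed"] += 1
    return counts, failed_names


counts, failed_names = summarize(SAMPLE_REPORT)
print(counts, failed_names)
```

A summary like this can be posted to the team channel or attached to the build, so that a failing test is visible without opening the full report.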

Example: A team tracks their “Defect Escape Rate” and notices it has risen. This prompts a retrospective where they identify a gap in their regression automation, leading them to prioritize adding more automated tests for critical paths.
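
One simple way to notice such a rise is to compute the escape rate per sprint and flag a run of consecutive increases. This is only a sketch: the sprint figures are invented, and the three-sprint window is an arbitrary assumption that a team would tune to its own cadence.

```python
def escape_rates(sprints):
    """sprints: list of (production_defects, pre_release_defects) per sprint."""
    rates = []
    for in_production, before_release in sprints:
        total = in_production + before_release
        rates.append(in_production / total if total else 0.0)
    return rates


def is_rising(rates, window=3):
    """True when the last `window` values are strictly increasing."""
    recent = rates[-window:]
    return len(recent) == window and all(
        earlier < later for earlier, later in zip(recent, recent[1:])
    )


# Invented history: (defects found in production, defects found before release)
history = [(1, 19), (2, 18), (2, 18), (3, 17), (5, 15)]
rates = escape_rates(history)
needs_retrospective = is_rising(rates)
```

A flag like `needs_retrospective` is only a prompt for discussion; the retrospective still has to work out why defects are escaping.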

Common Challenges in Agile Testing (and How to Overcome Them)

Even with a well-defined Agile Test Strategy, teams will encounter obstacles. Here are some common challenges and practical solutions:

| Challenge | Solution |
|---|---|
| Requirements Keep Changing Rapidly | Involve Testers Early & Continuously: Ensure QAs participate actively in backlog refinement and daily stand-ups to stay updated on evolving requirements in real-time. Foster continuous communication with Product Owners. Implement living documentation or collaborative tools (e.g., Confluence) to track changes. |
| Not Enough Time for Testing | Prioritize Automation & Shift Left: Start automating stable and repetitive tests (especially regression tests) early in the development cycle. This frees up manual testing time for new, complex, or exploratory work. Continuously integrate testing into the CI/CD pipeline for rapid feedback. Focus on risk-based testing to prioritize high-impact areas. |
| Poor or Missing Documentation | Foster Collaborative Documentation: Encourage the team to create concise, shared documentation (e.g., acceptance criteria within user stories, architecture diagrams). Use collaborative tools like Confluence, Notion, or Wiki pages. QAs can also contribute by documenting test scenarios and knowledge. |
| Unstable Test Environments | Invest in Robust Environment Management: Use cloud-based testing platforms, Docker containers, or container orchestration tools like Kubernetes to provide consistent, isolated, and easily reproducible environments. Automate environment setup and teardown. Implement clear processes for environment refreshes and data management. |
| Lack of Test Data | Implement a Test Data Management Strategy: Use mock data generators (e.g., Faker.js) or dedicated test data management tools. Sanitize production data for privacy compliance. Automate test data creation and cleanup as part of your test scripts. |
| Automation Tests Are Flaky/Unreliable | Focus on Test Design & Maintenance: Write robust, resilient automation scripts using proper waits and selectors. Isolate tests to run independently. Regularly review and maintain automation suites to address flakiness. Invest in skilled automation engineers and provide training. Implement dedicated time for automation refactoring within sprints. |
| Resistance to Change/Traditional Mindset | Educate and Evangelize: Conduct workshops to explain the benefits of Agile testing principles (e.g., Shift Left, “Quality is Everyone’s Responsibility”). Showcase successes and metrics. Start with small, impactful changes to build momentum and demonstrate value. Get leadership buy-in and support to drive the cultural shift. |

Agile moves fast, but the key is staying in sync with your team, adapting quickly, and proactively addressing these challenges to maintain high quality.
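
For the flaky-automation challenge in the table, "proper waits" usually means polling for a condition with a timeout instead of sleeping for a fixed time. The helper below is a framework-agnostic sketch of that idea; `wait_until` and the simulated element are hypothetical, and UI automation tools typically ship their own explicit-wait utilities that should be preferred where available.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class SlowElement:
    """Stand-in for a UI element that only becomes visible on the third check."""

    def __init__(self):
        self.checks = 0

    def is_visible(self):
        self.checks += 1
        return self.checks >= 3


element = SlowElement()
became_visible = wait_until(element.is_visible, timeout=2.0, interval=0.01)
```

Compared with a fixed `sleep`, polling returns as soon as the condition holds and fails with a clear error when it never does, which tends to make tests both faster and less flaky.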

Conclusion: Keep It Simple, Flexible, and Evolving

An Agile Test Strategy is far more than just a document – it’s your team’s living, shared roadmap for consistently delivering high-quality software in an iterative and fast-paced environment. It transforms testing from a standalone phase into an integral part of your entire development process.

Here’s why embracing a robust Agile Test Strategy matters for your team and your product:

- Builds Confidence: With every successful sprint and stable release, your team’s confidence in the product grows, fostering a more productive and innovative environment.

- Ensures Alignment: It keeps everyone, from developers and testers to product owners, on the same page regarding quality expectations, responsibilities, and processes.

- Adapts and Evolves: By leveraging retrospectives, continuous feedback, and performance metrics, your strategy remains flexible, adapting to new challenges and learning from past experiences.

- Reduces Risk and Cost: By shifting left and catching defects early, you drastically reduce the cost and effort associated with fixing bugs later in the cycle or, worse, in production.

Remember these core tenets when building your strategy:

- Start Small: Don’t try to implement everything at once. Identify the most critical areas for improvement and build upon small successes.

- Keep it Flexible: Your strategy should be a guideline, not a rigid rulebook. It must be able to evolve with your team, product, and market demands.

- Let it Grow with Your Team: Encourage team members to contribute to and refine the strategy, fostering a sense of ownership and collective responsibility for quality.

Your Agile Test Strategy should evolve just like your product does: one sprint, one iteration, and one valuable lesson at a time!

Happy Testing 🙂