As digital accessibility becomes increasingly important, many QA teams and developers turn to automated accessibility testing tools to check whether their website or application is accessible. These tools promise to speed up testing, catch compliance issues early, and integrate seamlessly into CI/CD pipelines. Their power lies in their ability to quickly scan large codebases and identify common violations of standards like WCAG.

If you haven’t already, check out our previous blog – Everything You Need to Know About Accessibility Testing – where we debunk common misconceptions and explore why accessibility is much broader than screen readers and visual impairments.

In this blog, we will examine what automated accessibility testing tools promise, what they actually deliver in real-world testing, and why human judgment remains a key component of the process. We will also look at the most common tools today: what they do well, where they fall short, and how best to use them in your QA process.

- Popular Automation Accessibility Testing Tools

- What Do These Tools Promise?

- Best Practices: Using Automation the Right Way

- Automation Accessibility Tools: What They Catch vs. What They Miss

- Why Manual Accessibility Testing Still Matters

- Conclusion: Why Automation Tools Can’t Replace Manual Accessibility Testing

Popular Automation Accessibility Testing Tools

Let’s break down the most widely used accessibility testing tools, their pros and cons, and where they stand in real-world usage.

Axe by Deque Systems

What It Is:

- One of the most respected and widely used accessibility tools, available as a browser extension, CLI tool, or integration for frameworks like Selenium, Cypress, and Playwright.

Promises:

- Accurate WCAG checks

- Seamless CI/CD integration

- Low false positives

- Enterprise-level dashboarding with Axe Monitor

What It Delivers:

- Excellent for spotting basic WCAG 2.1 violations

- Great developer experience

- Doesn’t check alt text meaning or visual clarity

- Needs manual testing for logical flow and interaction

Use Case:

- Use it during development and CI builds. Pair it with manual screen reader testing for best results.

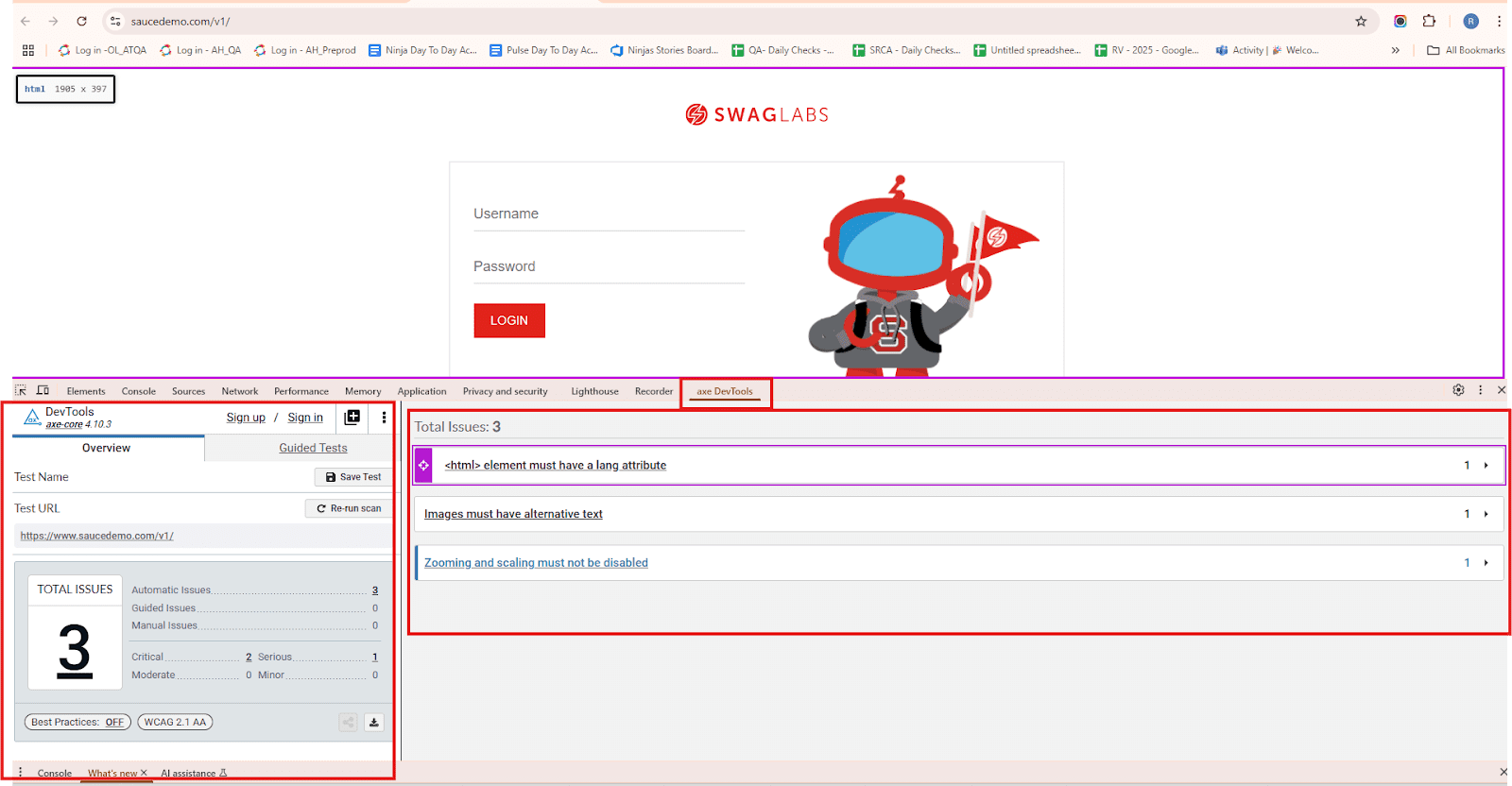

Example (Browser Extension):

- To demonstrate automation accessibility testing, we used Axe DevTools by Deque Systems on the SauceDemo Login Page.

Below is a step-by-step guide with real results and screenshots.

Steps to Run the Tests

- Step 1: Install Axe DevTools

- Install the Axe DevTools Chrome extension.

- Step 2: Open the Website

- Navigate to the target site – in our case: https://www.saucedemo.com/v1/

- Step 3: Open DevTools

- Right-click on the page → Click Inspect or press Ctrl + Shift + I.

- Step 4: Switch to the “axe DevTools” tab

- You’ll find a new Axe DevTools tab inside Chrome DevTools.

- Step 5: Click “Analyze.”

- Axe will begin scanning the page and show a list of accessibility issues it detects.

Test Results:

Here are the actual findings from the SauceDemo login screen using Axe DevTools:

Issues Detected (Total: 3)

- The <html> element must have a lang attribute

- Images must have alternative text (missing alt attribute)

- Zooming and scaling must not be disabled
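To make these findings concrete, here is a minimal Python sketch of what markup-level checks of this kind look like. It is not how axe-core is implemented; the class name, the function name, and the sample page are our own illustrations, and the rule names are borrowed from axe's rule IDs only for readability.

```python
from html.parser import HTMLParser


class BasicA11yChecker(HTMLParser):
    """Collects three markup-level findings. A toy stand-in, not axe-core."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "html":
            # A missing or blank lang attribute is reported.
            if not (attr.get("lang") or "").strip():
                self.issues.append("html-has-lang")
        elif tag == "img" and "alt" not in attr:
            # alt="" is allowed (decorative image); only a missing attribute fails.
            self.issues.append("image-alt")
        elif tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            content = (attr.get("content") or "").replace(" ", "").lower()
            if "user-scalable=no" in content or "user-scalable=0" in content:
                self.issues.append("meta-viewport")


def check(html: str) -> list[str]:
    checker = BasicA11yChecker()
    checker.feed(html)
    return checker.issues


# Hypothetical page used only for this illustration.
SAMPLE_PAGE = """
<html>
  <head><meta name="viewport" content="width=device-width, user-scalable=no"></head>
  <body><img src="mascot.png"><img src="divider.png" alt=""></body>
</html>
"""
print(check(SAMPLE_PAGE))  # ['html-has-lang', 'meta-viewport', 'image-alt']
```

Every check here looks only at whether an attribute is present or what value it holds. That is why a scan like this is fast, and also why it cannot say whether the alt text it finds is any good.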


Key Takeaway:

Even polished-looking websites can miss critical accessibility attributes. Automation tools like Axe catch these early in development, but for a complete accessibility audit, always complement automation with manual testing (screen readers, keyboard navigation, etc.).

Google Lighthouse

What It Is:

- A free tool built into Chrome DevTools. It runs accessibility audits alongside its performance and SEO checks.

Promises:

- Simple, quick accessibility score

- Checklist of key issues

- No installations needed

What It Delivers:

- Quick overview with accessibility score

- Detects low contrast, missing labels, and bad button usage

- Score can be misleading – passing doesn’t mean “fully accessible”

- Limited rule set compared to Axe

Use Case:

- Great for a fast audit or to report improvements. Not reliable for deep testing.

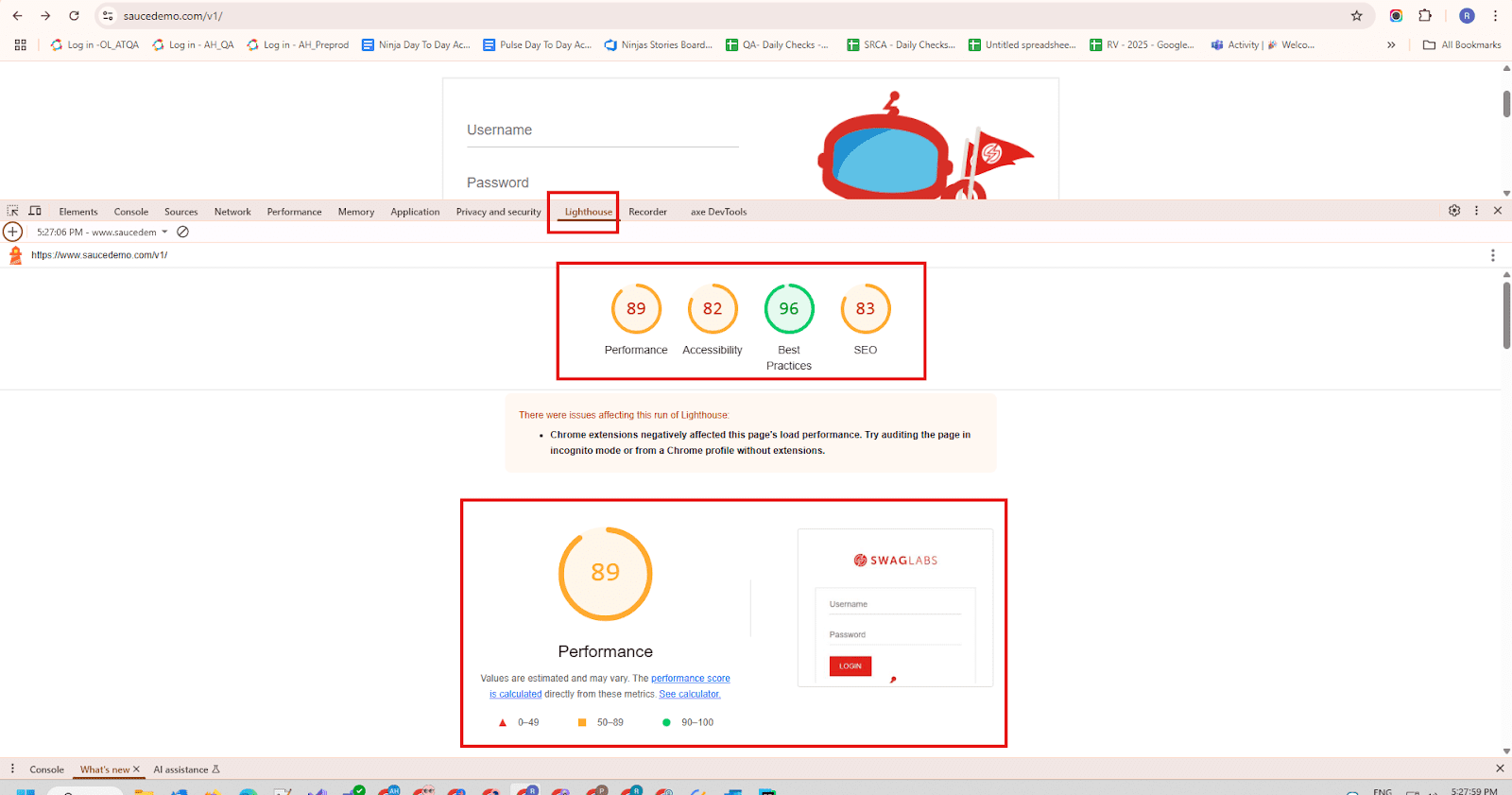

Example (Chrome DevTools):

- To demonstrate automated accessibility testing, we used Lighthouse on the SauceDemo Login Page.

Below is a step-by-step guide with real results and screenshots.

Steps to Run the Lighthouse Accessibility Test:

- Step 1: Open the Website

- Go to https://www.saucedemo.com/v1/ in Chrome.

- Step 2: Open Chrome DevTools

- Right-click anywhere on the page → Select Inspect or press Ctrl + Shift + I.

- Step 3: Go to the “Lighthouse” tab

- Click on the “Lighthouse” tab inside Chrome DevTools.

- Step 4: Configure the Audit

- Uncheck all categories except Accessibility

- Choose the mode: either Mobile or Desktop

- Click Analyze page load

- Step 5: View the Report

- After a few seconds, Lighthouse will generate a detailed accessibility report with a score out of 100 and a list of issues.

Test Results:

Accessibility Score: 82 / 100

Common issues detected by Lighthouse:

- Missing alt attributes for meaningful images

- Buttons without accessible names

- Insufficient contrast between background and foreground text

- Form elements not associated with labels
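Why can the score mislead? Lighthouse's documentation describes the accessibility score as a weighted average of pass/fail audits. The Python sketch below illustrates that arithmetic only; the audit list and the weights are made up for this example and are not Lighthouse's real values.

```python
def accessibility_score(audits: dict[str, tuple[bool, int]]) -> int:
    """Weighted share of passing audits, scaled to 0-100.

    `audits` maps an audit name to (passed, weight).
    """
    total = sum(weight for _, weight in audits.values())
    passed = sum(weight for ok, weight in audits.values() if ok)
    # With no audits at all there is nothing to fail, so report 100.
    return round(100 * passed / total) if total else 100


# Hypothetical audit results and weights, for illustration only.
audits = {
    "image-alt": (False, 10),
    "button-name": (True, 10),
    "meta-viewport": (True, 10),
    "color-contrast": (True, 7),
    "label": (True, 7),
    "html-has-lang": (True, 7),
    "document-title": (True, 7),
    "tabindex": (True, 7),
}
print(accessibility_score(audits))  # 85
```

In this sketch a page with images that have no alt text at all still scores 85, and nothing in the calculation asks whether a keyboard or screen reader user can actually complete a task.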


WAVE by WebAIM

What It Is:

- A visual accessibility evaluation tool. Available as a browser extension and API.

Promises:

- Clear visual feedback on accessibility errors

- Educational tool for learning WCAG issues

- API for developers and QA teams

What It Delivers:

- Great for quick visual checks

- Highlights structure and ARIA landmarks

- Doesn’t scale well for large apps or CI/CD

- Overlays can clutter the page, and it sometimes raises false positives

Use Case:

- Ideal for learning and quick audits on smaller pages.

Example (Browser Extension):

- Install the WAVE extension.

- Visit a webpage and click the WAVE icon.

- It overlays icons to show errors like missing alt text or bad ARIA roles.

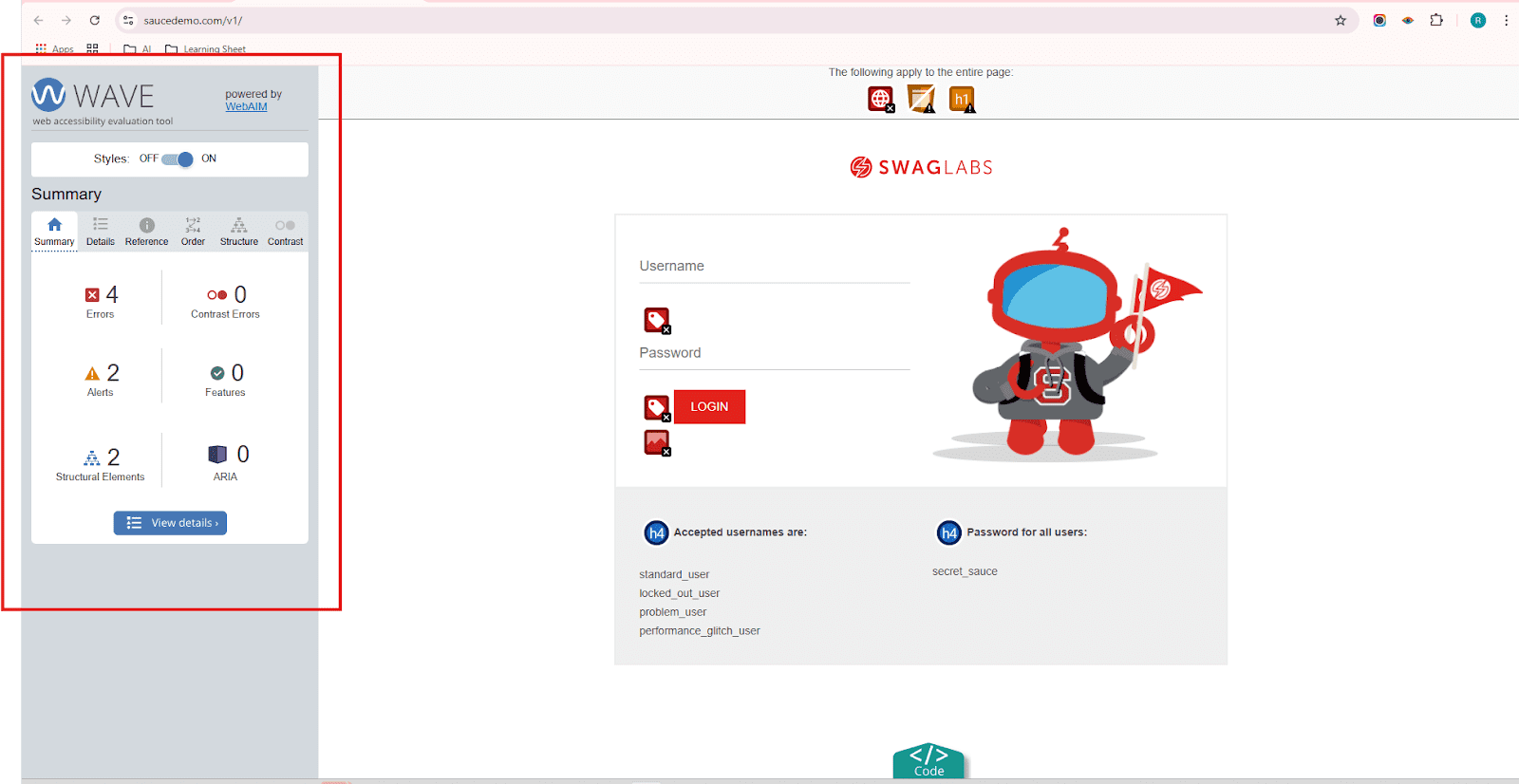

Example:

- To demonstrate manual visual accessibility inspection, we used the WAVE browser extension on the SauceDemo Login Page.

Below is a step-by-step guide along with real test results.

Steps to Run the WAVE Accessibility Test

- Step 1: Install the WAVE Extension

- Go to the Chrome Web Store and install the WAVE Evaluation Tool.

- Step 2: Open the Website

- Navigate to https://www.saucedemo.com/v1/ in your browser.

- Step 3: Launch WAVE

- Click the WAVE extension icon in your browser toolbar.

- WAVE will analyze the page and overlay visual indicators directly on the UI.

- Step 4: Review the Results

- Look for icons on the page:

- Red icons: Accessibility errors

- Yellow icons: Warnings (potential issues)

- Green icons: Structural elements (like ARIA landmarks or heading tags)


- Step 5: Use the Sidebar for Details

- The WAVE sidebar provides a categorized list of:

- Errors

- Alerts

- Features

- Structural elements

- Each issue includes:

- Description

- WCAG reference

- HTML snippet


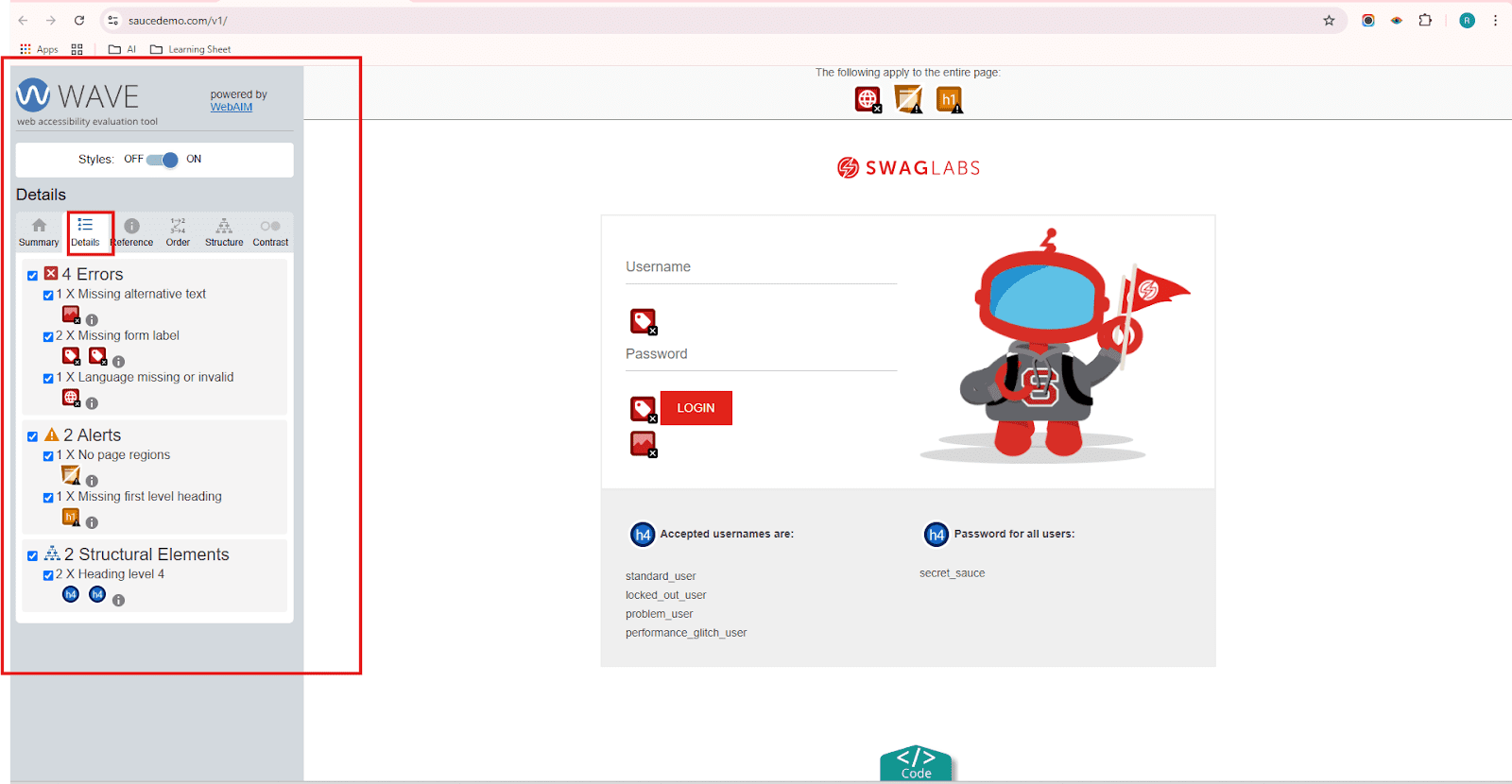

Test Results:

| Category | Count | Description |
| --- | --- | --- |
| Errors | 4 | 1× Missing alternative text (on image); 2× Missing form label (Username and Password fields); 1× Language missing or invalid (missing lang attribute in <html>) |
| Alerts | 2 | 1× No page regions (missing semantic landmarks like <main>, <nav>, etc.); 1× Missing first level heading (<h1> tag not found) |
| Structural Elements | 2 | 2× Heading level 4 (<h4> used, but no <h1> to <h3> levels) |
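The heading findings above are the kind of structural check a tool can do mechanically. Here is a rough Python sketch, assuming a simple rule set of our own (flag a missing h1 and any jump of more than one level). WAVE's actual logic may differ, and the sample markup is hypothetical.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_findings(html: str) -> list[str]:
    collector = HeadingCollector()
    collector.feed(html)
    findings = []
    if 1 not in collector.levels:
        findings.append("no h1 on the page")
    previous = 0  # 0 means "no heading seen yet"
    for level in collector.levels:
        if level > previous + 1:
            start = f"h{previous}" if previous else "the top of the page"
            findings.append(f"jump from {start} to h{level}")
        previous = level
    return findings


# Hypothetical markup: two h4 headings and nothing above them.
SAMPLE = "<body><h4>Usernames</h4><h4>Passwords</h4></body>"
print(heading_findings(SAMPLE))
# ['no h1 on the page', 'jump from the top of the page to h4']
```

A check like this can tell you the levels are out of order. It cannot tell you whether the headings describe the content in a way that helps someone navigate.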


Pa11y

What It Is:

- An open-source command-line tool for running accessibility reports. It uses the HTML_CodeSniffer engine by default and can also be configured to run the axe engine.

Promises:

- Fast automated tests

- CLI-friendly for DevOps pipelines

- Headless browser testing

What It Delivers:

- Easy to set up in CI/CD

- Covers standard WCAG checks

- Lacks advanced dashboards

- Misses interaction-level issues

Use Case:

- Use in automation test suites to catch regression issues.

Example (Command Line):

- npx pa11y https://example.com

Output:

- Shows a terminal-friendly list of issues like contrast errors, missing labels, etc. Great for CI.

CI/CD Example:

- Add the command to your pipeline YAML to fail builds on accessibility errors.
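Pa11y's CLI exits with a non-zero code when it finds issues, so the bare command is usually enough to fail a build, and its `--threshold` flag covers a simple error budget. If you want finer control, one option is to have Pa11y emit JSON with `--reporter json` and apply your own rule. The Python sketch below assumes the report is a JSON array of issues that each carry a `type` field; the function names and the trimmed-down sample report are hypothetical.

```python
import json


def count_by_type(report_json: str) -> dict[str, int]:
    """Tally issues in a Pa11y-style JSON report by their 'type' field."""
    counts: dict[str, int] = {}
    for issue in json.loads(report_json):
        kind = issue.get("type", "unknown")
        counts[kind] = counts.get(kind, 0) + 1
    return counts


def build_should_fail(report_json: str, max_errors: int = 0) -> bool:
    """Fail only when the number of errors exceeds the allowed budget."""
    return count_by_type(report_json).get("error", 0) > max_errors


# Hypothetical, trimmed-down report; a real one has more fields per issue.
SAMPLE_REPORT = json.dumps([
    {"type": "error", "message": "Image has no alt attribute", "selector": "img"},
    {"type": "error", "message": "Input has no label", "selector": "#user-name"},
    {"type": "warning", "message": "Heading levels are skipped", "selector": "h4"},
])

print(count_by_type(SAMPLE_REPORT))                    # {'error': 2, 'warning': 1}
print(build_should_fail(SAMPLE_REPORT))                # True
print(build_should_fail(SAMPLE_REPORT, max_errors=2))  # False
```

A budget like `max_errors` can help a team adopt the check on an existing codebase and then tighten it over time, rather than blocking every build on day one.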

Example:

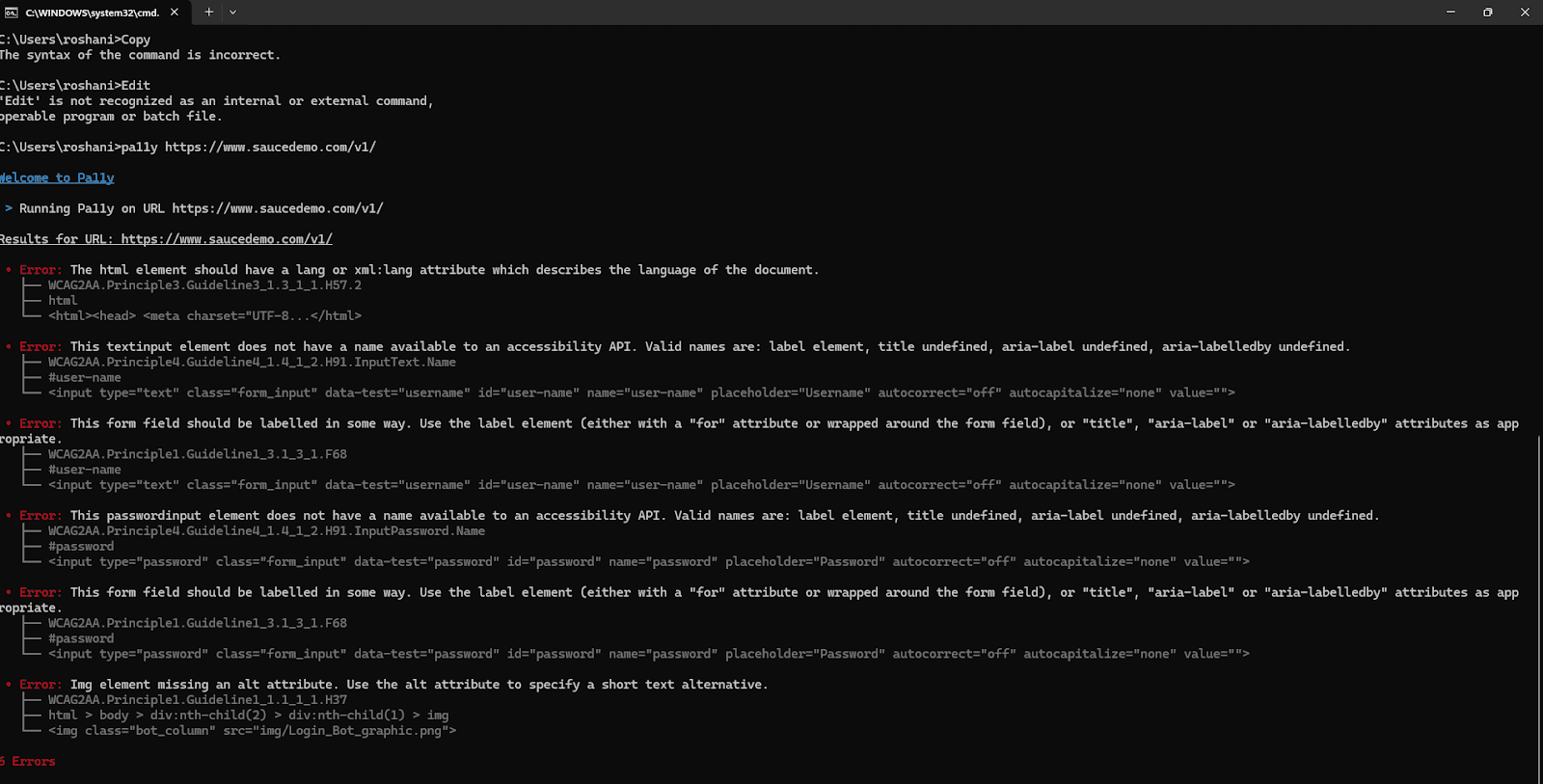

- Tool Used: Pa11y – Command-line accessibility testing tool

- Website Tested: https://www.saucedemo.com/v1/

- Execution Mode: Headless (CLI)

Steps to Run Pa11y

Prerequisites:

- Node.js installed

- Pa11y installed globally via npm: npm install -g pa11y

Run the Test:

pa11y https://www.saucedemo.com/v1/

Test Results (Pa11y Output)

| Issue Type | Count | Description |
| --- | --- | --- |
| Error | 4 | Missing alt attribute on the mascot image; No <label> on username/password fields; Missing lang attribute on <html> |
| Warning | 1 | Improper heading level usage (skipping heading levels directly to <h4>) |


Accessibility Insights by Microsoft

What It Is:

- A browser extension and Windows tool that tests against WCAG using automated and guided manual checks.

Promises:

- Rich manual testing experience

- Guidance for WCAG compliance

- Integration with Azure Boards

What It Delivers:

- Well-structured guided tests

- Focuses on completeness, not speed

- Slight learning curve

- Not ideal for large-scale regression

Use Case:

- Perfect for exploratory testing with guidance.

Example (Browser Extension):

- Install Accessibility Insights.

- Open your site → Launch the tool from the toolbar.

- Use the FastPass or Assessment mode.

- Get a guided walkthrough for manual checks (keyboard, landmarks, etc.).

Example:

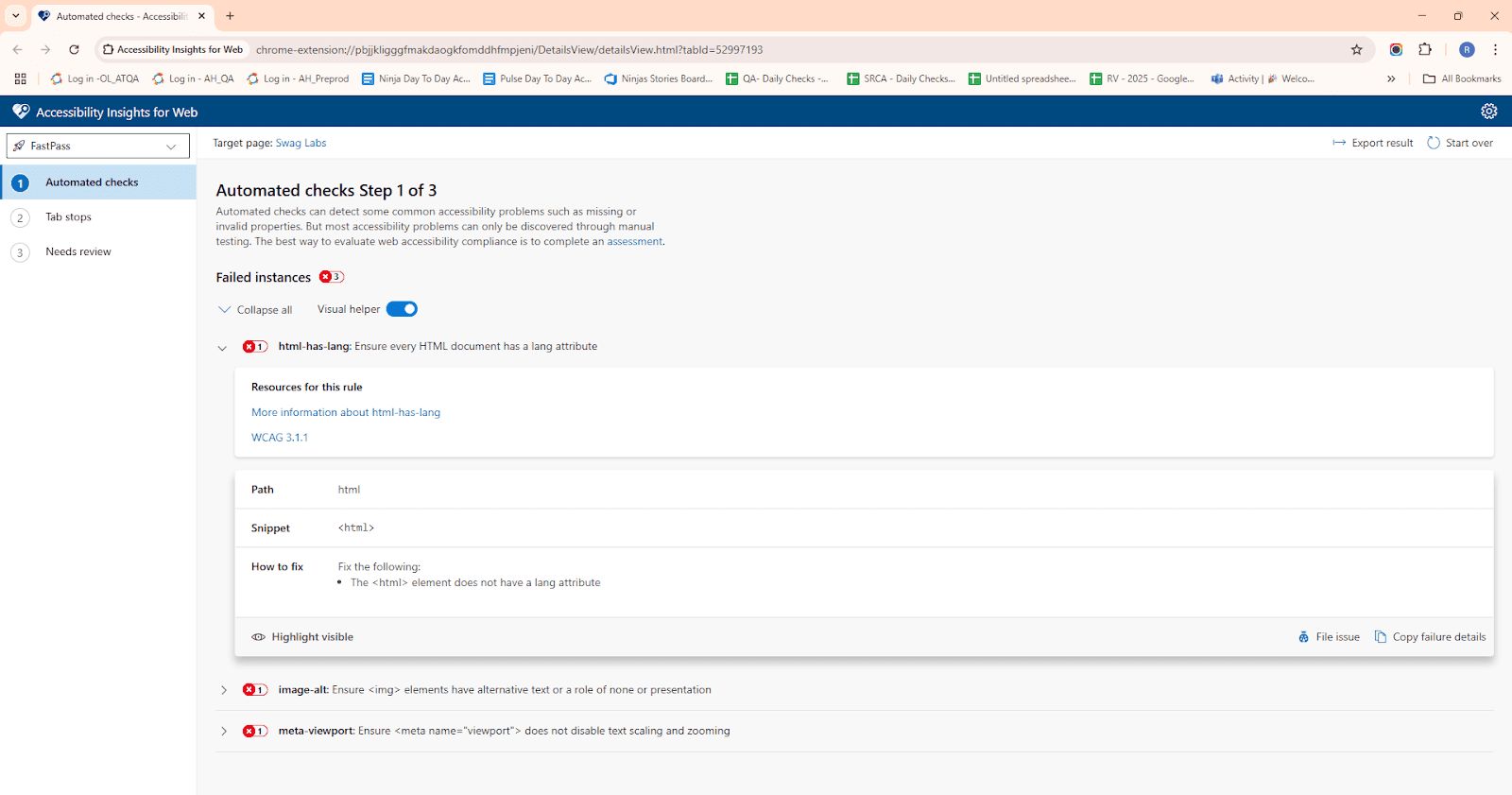

- Tool Used: Accessibility Insights for Web – a Chrome/Edge extension developed by Microsoft

- Website Tested: https://www.saucedemo.com/v1/

- Execution Mode: Manual and automated (both available)

Steps to Run Accessibility Insights Test

- Step 1: Install the Extension

- Download the Accessibility Insights for Web extension from the Chrome Web Store or the Microsoft Edge Add-ons store.

- Step 2: Open the Target Website

- Navigate to https://www.saucedemo.com/v1/ in Chrome or Edge.

- Step 3: Launch Accessibility Insights

- Click the Accessibility Insights icon in the browser toolbar → Select “FastPass” (automated test) or “Assessment” (detailed manual test).

- Step 4: Run FastPass

- FastPass includes:

- Automated checks (using axe-core)

- Tab stops visualization

- Click “Start” to run the test.


Test Results:

| Issue Category | Count | Example |
| --- | --- | --- |
| Automated Check Failures | 3 | Image missing alt attribute; Inputs missing <label>; Missing lang on <html> |
| Tab Stops Visualization | 0 | Shows tab order is present, but inputs lack labels, affecting screen reader usability |



What Do These Tools Promise?

Automated accessibility testing tools are often marketed as powerful, all-in-one solutions for achieving digital accessibility compliance. Their key promises include:

Automated Detection of WCAG Violations

Most tools claim they can automatically detect violations against the WCAG (Web Content Accessibility Guidelines) as applied to your entire website or application. They often promote near-instant coverage across WCAG 2.1 levels A, AA, and even AAA, suggesting comprehensive issue identification with minimal manual intervention.

Quick Identification of All Accessibility Issues

One of the strongest selling points is speed – tools often claim to detect all major accessibility issues within seconds. With just a click or a build trigger, they promise to uncover everything from missing alt text to improper heading structures and color contrast failures.

Elimination of Manual Testing

Some tools suggest that an automated scan is enough to meet your compliance standards, and in doing so they imply that manual accessibility testing by experts or users with disabilities can be reduced or skipped altogether.

Seamless Integration with CI/CD Pipelines

Modern accessibility tools are often built to plug into the CI/CD (Continuous Integration/Continuous Deployment) process. The marketing touts that automated tests can run effortlessly on every commit or build, so issues are flagged earlier in the development cycle and accessibility stays at the front of developers’ minds.

But What’s the Reality?

- Most automated tools identify only about 30% to 50% of the actual accessibility issues.

- They are excellent at identifying code-level violations (like missing ARIA attributes or improper form labeling).

- However, they cannot assess visual context, user intent, or interaction-based issues, like whether a modal traps focus, whether link text is meaningful, or whether a screen reader user can navigate intuitively.

- Automation tools also struggle with dynamic content, custom components, and JavaScript-heavy UIs where accessibility is related to behavior and not just markup.

Best Practices: Using Automation the Right Way

Automation is powerful, but only when used in the right context. Here’s how to blend automated tools with human insight for maximum coverage and usability.

Use Automation Tools for Speed and Coverage

- Quickly catch common WCAG violations (e.g., missing labels, color contrast)

- Ideal for early development and CI pipelines

- Helps prevent regressions by catching known issues consistently

Rely on Manual Testing for Real User Scenarios

- Validate keyboard navigation, focus order, and screen reader flow

- Confirm meaningful alt text, headings, and context

- Detect subtle issues like confusing forms, popups, or live content changes

Educate Your Entire Team

- Accessibility is not just a QA task – it involves developers, designers, and product owners

- Encourage using accessible components and semantic HTML from the start

- Share insights from manual testing in retrospectives and grooming sessions

Don’t Rely on “Green Reports” as a Final Verdict

- A passing score from a tool doesn’t mean the experience is accessible

- Tools don’t understand intent, empathy, or task completion

- Always follow up with a real human evaluation

Combine Tools + Manual Testing Strategically

| Project Stage | What to Use | Why It Works |
| --- | --- | --- |
| Development | axe DevTools, Google Lighthouse | Fast, inline checks to catch issues early |
| QA Testing | axe CLI, Pa11y, Accessibility Insights (manual) | Combines automation with guided manual testing for full coverage |
| UAT / Final Testing | NVDA, JAWS, VoiceOver, keyboard-only navigation | Real-world testing to verify usability for assistive tech users |

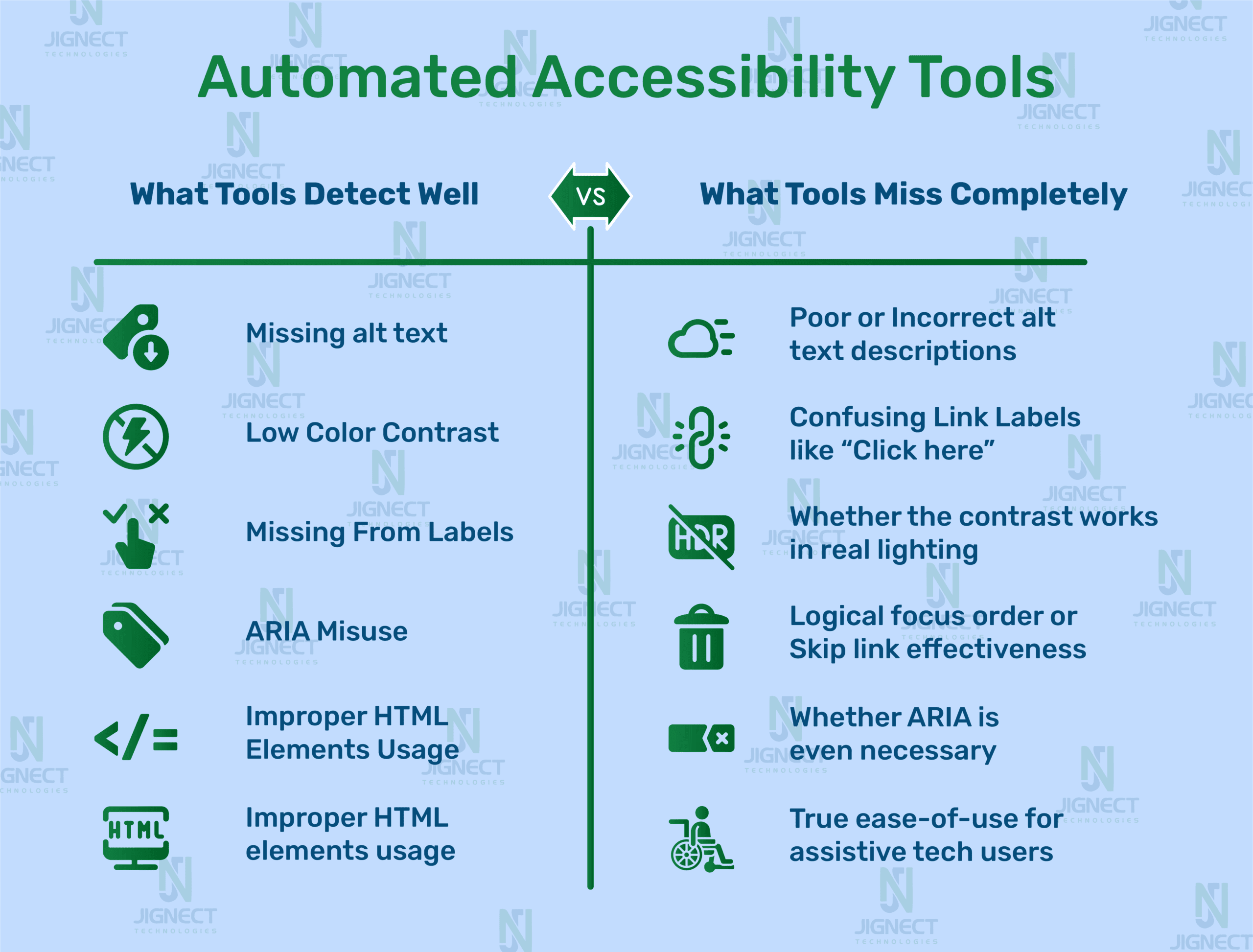

Automation Accessibility Tools: What They Catch vs. What They Miss

| What Tools Typically Detect Well | What Tools Commonly Overlook or Misjudge |
| --- | --- |
| Missing alt attributes on <img> tags | Inadequate or misleading alt text, like “image123” or irrelevant descriptions |
| Incorrect semantic elements (e.g., using <div> instead of <button>) | Ambiguous link/button text, like “Click here” or “Read more,” without context |
| Insufficient color contrast ratios | Real-world contrast issues, such as glare or color conflicts, under different lighting conditions |
| Missing or empty form labels | Unclear or vague labels, such as “Field” or unlabeled form sections that confuse screen reader users |
| Keyboard traps or lack of keyboard access | Logical tab order, meaningful grouping, or presence and usefulness of “Skip to content” links |
| Misuse of ARIA roles or attributes | Unnecessary or redundant ARIA usage, or lack of awareness that native HTML might suffice |
| Incorrect or missing landmark roles | True navigability for screen reader users – i.e., how intuitive and effective the landmarks feel in use |
| Non-descriptive button labels, like <button></button> | Contextual relevance, e.g., whether a button’s label explains what action it performs |
| Presence of focus indicators | Visibility and clarity of focus indicators in real usage scenarios |
| Use of headings out of order (e.g., h3 after h1) | Document structure clarity, such as whether headings provide a meaningful content hierarchy |
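Color contrast is a good example of a check that is pure arithmetic, which is why tools handle it well. The Python sketch below follows the WCAG definitions of relative luminance and contrast ratio; the function names are our own, and it only handles six-digit hex colors.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) up to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # WCAG AA minimum for normal-size text

for color in ("#000000", "#767676", "#777777"):
    ratio = contrast_ratio(color, "#ffffff")
    verdict = "pass" if ratio >= AA_NORMAL_TEXT else "fail"
    print(color, round(ratio, 2), verdict)
# #000000 21.0 pass
# #767676 4.54 pass
# #777777 4.48 fail
```

The two grays are visually almost identical, yet one passes and one fails. The formula also knows nothing about glare, screen quality, or text placed over an image, which is where the right-hand column of the table comes in.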

Why Manual Accessibility Testing Still Matters

While automated checkers like Axe, Lighthouse, and WAVE are great at identifying the easy, surface-level accessibility issues, they address only part of the problem. Here’s what they cannot do, and why human judgment remains irreplaceable:

Determine If Alt Text Is Meaningful

Automation tools can identify missing alt attributes, but they cannot identify if the description conveys useful content.

For instance:

- “Portrait of Dr. Jane Smith, Nobel Laureate” → Useful

- “image123.jpg” or “picture” → Useless

Only a human can decide whether an image is meaningful content or purely decorative, and whether the alt text serves that purpose.
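The most a script can reasonably do here is flag alt text that looks like a placeholder so a person can review it. The Python sketch below is a heuristic of our own, not a feature of any tool mentioned in this post; the word list and the file extensions are assumptions.

```python
import re

# Alt text that ends in an image file extension is almost certainly a filename.
FILENAME = re.compile(r"\.(png|jpe?g|gif|svg|webp)$", re.IGNORECASE)
# Generic words that describe nothing about the image (assumed list).
PLACEHOLDERS = {"image", "picture", "photo", "graphic", "img"}


def looks_like_placeholder(alt: str) -> bool:
    """True when alt text is obviously a filename or a generic word."""
    text = alt.strip()
    if not text:
        # Empty alt marks an image as decorative; whether it really is
        # decorative is still a human call, so it is not flagged here.
        return False
    return bool(FILENAME.search(text)) or text.lower() in PLACEHOLDERS


for alt in ("image123.jpg", "picture", "Portrait of Dr. Jane Smith, Nobel Laureate"):
    print(repr(alt), looks_like_placeholder(alt))
# 'image123.jpg' True
# 'picture' True
# 'Portrait of Dr. Jane Smith, Nobel Laureate' False
```

A result of False does not mean the alt text is good. The same portrait described as “Company logo” would also pass this check, and only someone looking at the image would notice that the description is wrong.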

Assess Logical Focus Order and Navigation Flow

Tools can confirm keyboard access, but they can’t assess whether the tab order makes sense.

Humans test:

- Is it possible to navigate from search to results without jumping around the page?

- Is the focus indicator clearly visible at every step?

Logical flow is critical for users relying on a keyboard or assistive tech, and only manual testing can fully validate it.

Understand Complex Interactions

Components like modals, dynamic dropdowns, sliders, and popovers can behave unpredictably:

- Does focus stay inside the modal while it’s open?

- Can you close it using only the keyboard?

- Is ARIA used appropriately, or is it misapplied?

Automation tools may not fully grasp interaction nuances, especially when JavaScript behaviors are involved.

Simulate Real Screen Reader Experiences

Automation tools don’t use screen readers – humans do.

Manual testing helps answer:

- Can a screen reader user complete a task?

- Is the narration logical, or is it confusing?

- Are landmarks and headings truly helpful?

Only a manual test can confirm if the experience is navigable, informative, and task-completable.

Sense Confusion, Delight, or Frustration

Accessibility is not just about compliance – it’s about usability and dignity.

Automation tools can’t measure:

- Is the experience pleasant or frustrating?

- Are error messages clear and timely?

- Can someone complete the task without asking for help?

These insights come only from real human experience – whether it’s a tester, a user with a disability, or a QA professional.

Manual testing adds empathy and human experience to the process – something no tool can automate.

Conclusion: Why Automation Tools Can’t Replace Manual Accessibility Testing

Automated accessibility tools are invaluable allies. They speed up audits, catch repetitive code issues, and fit seamlessly into modern DevOps pipelines. They help you do more, faster, but they can’t do it all.

No matter how advanced a tool claims to be, it can’t judge meaning, can’t feel frustration, and can’t guarantee usability for someone navigating your app with a screen reader or only a keyboard.

True accessibility demands a human perspective.

It requires manual testing, empathy, and sometimes even direct feedback from people with disabilities. That’s how you uncover real barriers and craft experiences that are not just compliant, but welcoming and usable.

Remember:

Automation helps you check the rules.

Manual testing helps you serve the people.


Happy Testing 🙂