In the field of QA automation, test reporting is an important process that helps connect technical and non-technical team members. It ensures everyone can understand the results of automated tests, making the process more transparent and reliable.

Behavior-Driven Development (BDD) frameworks, such as SpecFlow, have become popular because they encourage collaboration between developers, testers, and business stakeholders. To make the most of these frameworks, adding detailed and clear test reports is essential. Good test reporting provides easy-to-read information about test results, helps identify issues, and ensures accountability within the team.

In this blog, we will explore the basics of test reporting in C# and its importance in BDD frameworks. We will also guide you through setting up popular reporting tools like LivingDoc, ExtentReports, and Allure Reports to enhance your automation testing process.

Why Test Reporting Is Crucial for QA Automation

Test reporting is an essential practice in QA automation because it helps teams clearly understand and communicate the results of automated tests. It offers a structured way to collect, document, and share this information. Below are some key reasons why test reporting is so important:

- Traceability: Test reports link test results to specific requirements or user stories. This ensures that every test conducted is directly tied to a business goal or functionality, helping teams track whether the software meets the specified needs.

- Transparency: A well-structured test report provides an overview of the entire testing process. It shows which tests passed and which ones failed, helping teams quickly identify what is working and what needs attention. This transparency helps all stakeholders stay informed.

- Collaboration: Test reports improve communication between developers, testers, and other stakeholders by providing a common understanding of the test results. They enable everyone to discuss issues and solutions in a more structured and informed way, promoting teamwork.

- Accountability: When a test fails, the report clearly shows which part of the software or system failed, making it easier for teams to take ownership and address the issue. This helps hold the development and testing teams accountable for fixing problems.

- Continuous Improvement: Test reports provide valuable data that can be used to identify patterns or recurring issues. This information helps teams make data-driven decisions to improve the quality of the software, leading to more efficient development cycles and better product quality over time.

The Pros and Cons of Skipping Test Reporting in Automation

Advantages of Not Having Test Reporting:

- Simplicity: No need for additional configurations or tools, allowing teams to focus solely on running tests.

- Faster Execution: Eliminates delays caused by report generation, beneficial for short-term testing cycles.

Disadvantages of Not Having Test Reporting:

- Lack of Visibility: Teams and stakeholders have no clear understanding of the current status of the test suite.

- Poor Collaboration: Non-technical stakeholders are left out of the loop and cannot easily track progress.

- Debugging Challenges: Without traceable evidence, diagnosing and fixing failed tests becomes difficult.

- Missed Trends: Absence of historical data prevents identification of recurring issues or bottlenecks, hindering continuous improvement.

Set-Up Basic BDD Framework in C#

To start integrating test reporting in C#, the first thing needed is a basic BDD framework, such as SpecFlow. This will allow us to write tests in a simple, readable format. Once the BDD framework is set up and tests are running, we can then integrate a test reporting tool. The reporting tool will capture and display test results, making it easier to analyze performance, track progress, and communicate results with stakeholders.

For a better understanding of how to set up a BDD project, including what a feature file is, what step definition files are, and how to structure your BDD framework, you can refer to our blog on Test Automation with BDD, SpecFlow, and Selenium. This will provide you with detailed guidance on setting up and using the framework effectively.

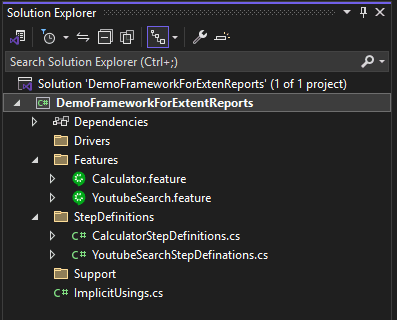

Once you’ve set up the C# BDD framework and added some basic tests based on your requirements, your project structure should look like this, with the feature file and step definitions file in place.

Project Structure:

YoutubeSearch.feature feature file:

Code:

Feature: YoutubeSearch

Search JigNect Technologies on Youtube.

@Jignect

Scenario: Searching JigNect Technologies on Youtube

Given Open the browser

When Search the Jignect Technolgies in the Youtube URL

Then The JigNect Technologies channel logo should be displayed on the page

YoutubeSearchStepDefinations.cs file:

Code :

using OpenQA.Selenium;

using OpenQA.Selenium.Chrome;

namespace DemoFrameworkForExtenReports.StepDefinitions

{

[Binding]

public sealed class YoutubeSearchStepDefinations

{

private IWebDriver _driver;

public YoutubeSearchStepDefinations(IWebDriver driver)

{

_driver = driver;

}

[Given(@"Open the browser")]

public void GivenOpenTheBrowser()

{

_driver = new ChromeDriver();

_driver.Manage().Window.Maximize();

}

[When(@"Search the Jignect Technolgies in the Youtube URL")]

public void WhenEnterTheURL()

{

_driver.Url = "https://www.youtube.com/";

Thread.Sleep(3000);

}

[Then(@"The JigNect Technologies channel logo should be displayed on the page")]

public void ThenSearchForTheTestersTalk()

{

_driver.FindElement(By.XPath("//*[@name='search_query']")).SendKeys(" Jignect Technologies ");

_driver.FindElement(By.XPath("//*[@name='search_query']")).SendKeys(Keys.Enter);

Thread.Sleep(5000);

_driver.Quit();

}

}

}
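The step definitions above pause with fixed Thread.Sleep calls, which either waste time or turn out to be too short on a slow machine. One possible alternative is to poll for a condition until it holds or a timeout expires. The following is a minimal sketch of that idea; the Wait class and its Until method are hypothetical names introduced here for illustration and are not part of the original framework, SpecFlow, or Selenium.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper (not part of the original framework): polls a condition
// instead of sleeping for a fixed amount of time.
public static class Wait
{
    // Returns true as soon as the condition holds, or false once the timeout has elapsed.
    public static bool Until(Func<bool> condition, TimeSpan timeout, TimeSpan pollInterval)
    {
        var stopwatch = Stopwatch.StartNew();
        while (true)
        {
            if (condition())
            {
                return true;
            }
            if (stopwatch.Elapsed >= timeout)
            {
                return false;
            }
            // Short pause between checks so the loop does not spin the CPU.
            Thread.Sleep(pollInterval);
        }
    }
}
```

In a step definition, a call such as Wait.Until(() => _driver.FindElements(By.Name("search_query")).Count > 0, TimeSpan.FromSeconds(10), TimeSpan.FromMilliseconds(250)) could stand in for Thread.Sleep(3000). The timeout and poll interval shown here are illustrative values, not recommendations from the original setup.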

The next step is to integrate test reporting into the framework. This will allow us to capture and display the results of tests in a clear and organized way. We will look into the process of integrating a reporting tool and learn how to easily track and analyze test results.

Various Types of Test Reporting

There are several methods to integrate test reporting in C#. We will explore each of these methods one by one and learn how to implement them practically.

Using LivingDoc.HTML Report :

LivingDoc is a reporting tool specifically designed to generate HTML reports from the SpecFlow tests. It visualizes the Gherkin feature files, step definitions, and test execution results in an easy-to-understand format. The reports can be shared with both technical and non-technical stakeholders, giving them an interactive view of the tests and their outcomes.

LivingDoc provides features such as:

- Detailed Test Execution: A summary of all executed scenarios.

- Traceability: Links between Gherkin features, step definitions, and test execution.

- Up-to-date Results: Regenerating the report after each test run reflects the current state of the tests.

Steps to Integrate LivingDoc.HTML Report in C# :

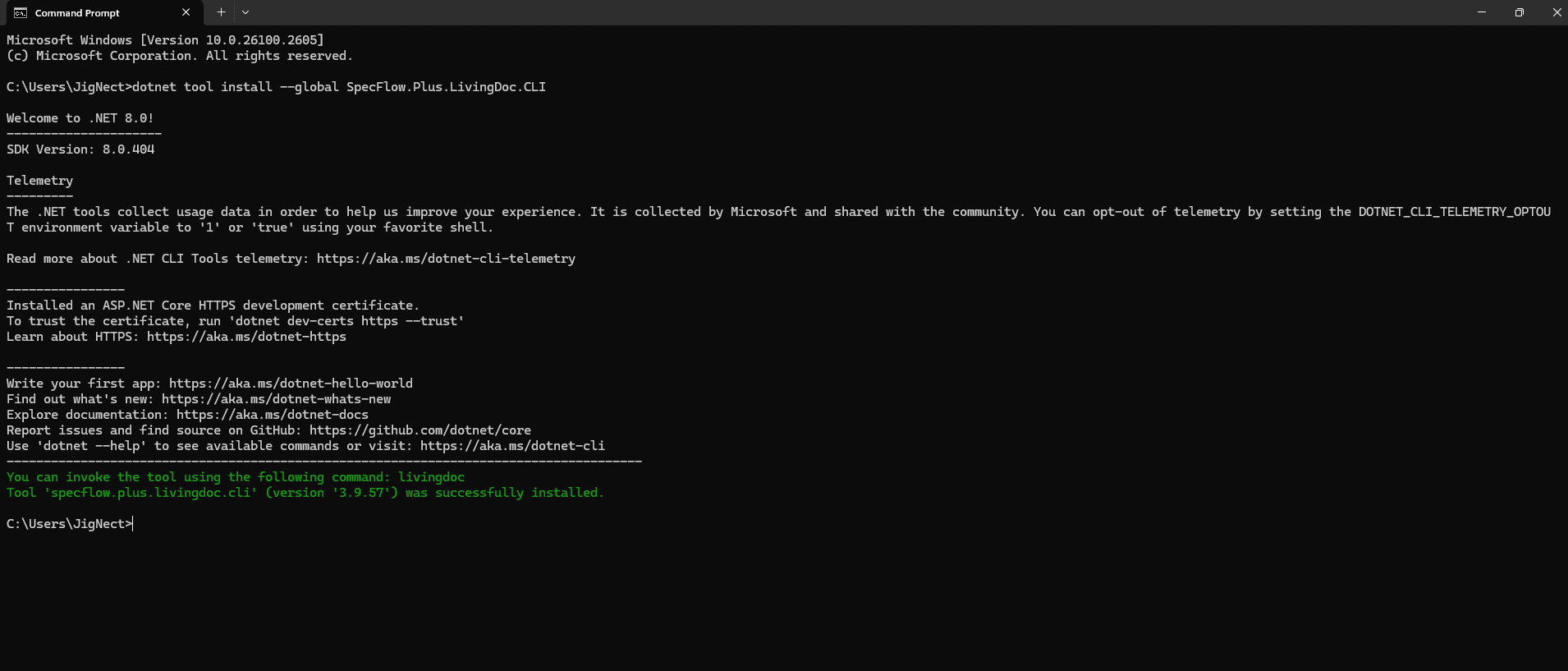

First, let’s install the LivingDoc command-line tool on your local system using the command prompt. Enter the following command and press Enter to install it:

Code : dotnet tool install --global SpecFlow.Plus.LivingDoc.CLI

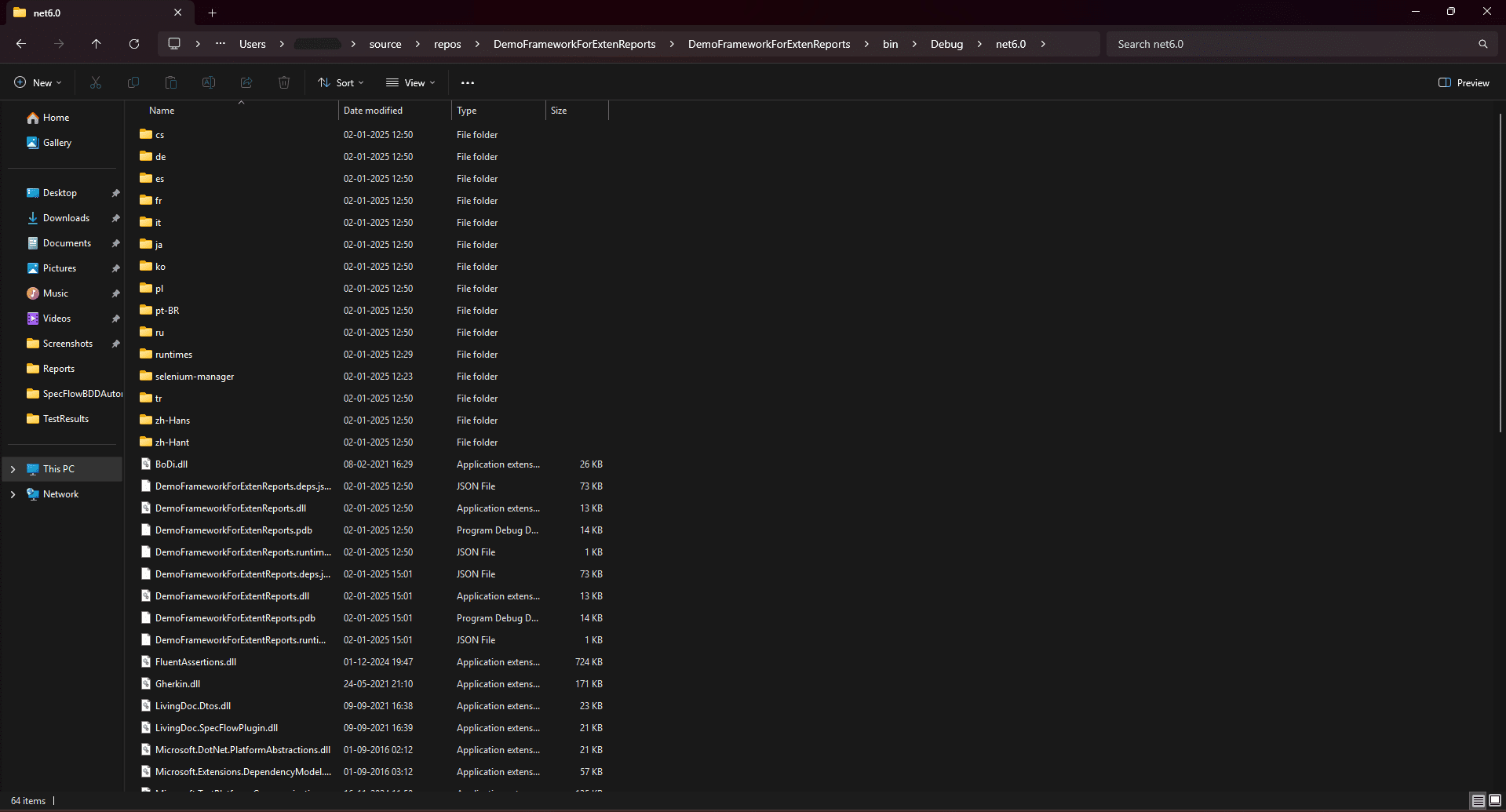

Next, go to the build output folder of your SpecFlow project, where the test assembly is built. In this example, the folder is: C:\Users\rushabh\source\repos\DemoFrameworkForExtenReports\DemoFrameworkForExtenReports\bin\Debug\net6.0

Use the “cd” command to switch to this folder in the command prompt, as shown in the screenshot for reference.

The final step is to run the following command to generate the LivingDoc.html report:

Code : livingdoc test-assembly PROJECT_NAME.dll -t TestExecution.json

(Replace PROJECT_NAME with the name of your project.)

After running this command, a LivingDoc report will be generated in the specified folder. Upon opening the report, you’ll be able to view a detailed test summary, including the status of each test: passed, failed, or not executed. The report presents all the test cases and their steps in a clean, organized format, making it easy to analyze the results.

Integrating LivingDoc.HTML Report with SpecFlow in a C# project allows you to generate interactive, detailed, and shareable reports for your BDD tests. It improves the collaboration between teams and stakeholders, making it easier to track and analyze test execution.

By following the steps outlined in this blog, you can quickly set up LivingDoc in your C# project and start benefiting from its powerful reporting features.

Using ExtentReports Integration

Extent Reports is a versatile test reporting library for .NET and Java that generates interactive and detailed HTML reports. These reports include test execution summaries, logs, screenshots, and more, making it a valuable tool for test automation.

Key Features of Extent Reports:

- Customizable Design: Tailor the report’s look and feel to match your needs.

- Interactive Logs: Add detailed logs, screenshots, and step-by-step information for easy traceability.

- Real-Time Updates: Generate and view reports while tests are running.

Why Use Extent Reports with SpecFlow?

SpecFlow, a leading BDD framework for C#, integrates seamlessly with Extent Reports, offering significant advantages:

- Clear Visualization: View test results, scenarios, and steps in a well-structured and visually appealing format.

- Enhanced Debugging: Easily trace failures with rich logs and embedded screenshots.

- Stakeholder-Friendly Reports: Share intuitive, user-friendly reports with both technical and non-technical team members.

By combining the power of Extent Reports with SpecFlow, let us see how we can create comprehensive and accessible test reports that streamline debugging and improve collaboration across teams.

Steps to Integrate Extent Report in C# :

To integrate Extent Reports in C#, start by adding its NuGet package. Right-click the Dependencies folder, choose Manage NuGet Packages, search for ExtentReports, and install a stable version of the package. Note that the code in this section uses the ExtentHtmlReporter class; if the version you install no longer provides that class, use the HTML reporter class documented for that version instead. Refer to the attached screenshots for reference.

After installing the NuGet package, the next step is to create a TestResults folder in your project.

Next, I created a hooks class to handle browser setup and teardown. Using hooks, I launch the browser before the tests and close it afterward. Below is the hooks code for reference.

Code:

using BoDi;

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

namespace DemoFrameworkForExtentReports.Hooks

{

[Binding]

public sealed class Hooks

{

private readonly IObjectContainer _container;

public Hooks(IObjectContainer container)

{

_container = container;

}

[BeforeScenario("@Jignect")]

public void BeforeScenarioWithTag()

{

Console.WriteLine("Running inside tagged hooks in a specflow");

}

[BeforeScenario(Order = 1)]

public void FirstBeforeScenario()

{

IWebDriver driver = new ChromeDriver();

driver.Manage().Window.Maximize();

_container.RegisterInstanceAs<IWebDriver>(driver);

}

[AfterScenario]

public void AfterScenario()

{

var driver = _container.Resolve<IWebDriver>();

if (driver != null)

{

driver.Quit(); // Quit ends the whole browser session, not only the current window

}

}

}

}

Also, after adding the Hooks.cs file, we need to update the code in the YoutubeSearchStepDefinations.cs file by commenting out the browser launch and teardown logic, as shown in the code snippet below.

Update code of YoutubeSearchStepDefinations.cs :

using OpenQA.Selenium;

namespace DemoFrameworkForExtenReports.StepDefinitions

{

[Binding]

public sealed class YoutubeSearchStepDefinations

{

private IWebDriver _driver;

public YoutubeSearchStepDefinations(IWebDriver driver)

{

_driver = driver;

}

[Given(@"Open the browser")]

public void GivenOpenTheBrowser()

{

//_driver = new ChromeDriver();

//_driver.Manage().Window.Maximize();

}

[When(@"Search the Jignect Technolgies in the Youtube URL")]

public void WhenEnterTheURL()

{

_driver.Url = "https://www.youtube.com/";

Thread.Sleep(3000);

}

[Then(@"The JigNect Technologies channel logo should be displayed on the page")]

public void ThenSearchForTheTestersTalk()

{

_driver.FindElement(By.XPath("//*[@name='search_query']")).SendKeys(" Jignect Technologies ");

_driver.FindElement(By.XPath("//*[@name='search_query']")).SendKeys(Keys.Enter);

Thread.Sleep(5000);

//_driver.Quit();

}

}

}

The next step is to create an ExtentReport.cs class in the project, where we will implement the logic for generating Extent Reports in C#.

Now, we will incorporate the logic for generating Extent Reports into our code and break it down for better understanding. This involves setting up the necessary components to create detailed, interactive test reports and using them effectively in the test automation framework. Let’s understand the code.

Code:

using AventStack.ExtentReports;

using AventStack.ExtentReports.Reporter;

using AventStack.ExtentReports.Reporter.Configuration;

using OpenQA.Selenium;

namespace DemoFrameworkForExtentReports.Utility

{

public class ExtentReport

{

public static ExtentReports _extentReports;

public static ExtentTest _feature;

public static ExtentTest _scenario;

public static String dir = AppDomain.CurrentDomain.BaseDirectory;

public static String testResultPath = dir.Replace("bin\\Debug\\net6.0", "TestResults");

public static void ExtentReportInit()

{

var htmlReporter = new ExtentHtmlReporter(testResultPath);

htmlReporter.Config.ReportName = "Automation Status Report";

htmlReporter.Config.DocumentTitle = "Automation Status Report";

htmlReporter.Config.Theme = Theme.Standard;

htmlReporter.Start();

_extentReports = new ExtentReports();

_extentReports.AttachReporter(htmlReporter);

_extentReports.AddSystemInfo("Application", "Youtube");

_extentReports.AddSystemInfo("Browser", "Chrome");

_extentReports.AddSystemInfo("OS", "Windows");

}

public static void ExtentReportTearDown()

{

_extentReports.Flush();

}

public string addScreenshot(IWebDriver driver, ScenarioContext scenarioContext)

{

ITakesScreenshot takesScreenshot = (ITakesScreenshot)driver;

Screenshot screenshot = takesScreenshot.GetScreenshot();

string screenshotLocation = Path.Combine(testResultPath, scenarioContext.ScenarioInfo.Title + ".png");

screenshot.SaveAsFile(screenshotLocation);

return screenshotLocation;

}

}

}

This code sets up Extent Reports for test reporting in a Selenium-based framework. Here’s a simplified explanation:

- Namespaces and Class Setup:

- The code uses AventStack.ExtentReports to create and manage test reports.

- OpenQA.Selenium is used for interacting with the browser and capturing screenshots during test execution.

- Variables:

- _extentReports: An object for managing the extent report.

- _feature and _scenario: Used to log the status of features and scenarios in the report.

- dir: The base directory path for the project.

- testResultPath: Path where the test results (reports) will be saved.

- ExtentReportInit():

- Initializes the Extent Report by setting up an HTML reporter with the specified configurations (like report name, title, and theme).

- It attaches the reporter to _extentReports and adds system information such as application name, browser, and OS.

- ExtentReportTearDown():

- Finalizes the report by calling the Flush() method to ensure all data is written to the report file.

- addScreenshot():

- Captures a screenshot during test execution using the ITakesScreenshot interface from Selenium.

- Saves the screenshot in the specified folder with the scenario’s title as the filename and returns the screenshot location.

- Adding screenshots helps save time for the team by automatically capturing the screenshot of a failed step whenever a test case fails. This screenshot is then included in the Extent Report, making it easier to analyze and debug the failure without needing to reproduce the issue manually.

In short, this class manages the initialization and teardown of Extent Reports, adds system information, and captures screenshots for failed scenarios.
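The testResultPath field above is derived by replacing the literal text "bin\\Debug\\net6.0" in the base directory, so it stops pointing at the project folder as soon as the build configuration or target framework changes. One possible alternative is to walk up from the output folder until a project file is found. The sketch below illustrates that approach; ReportPaths and FindTestResultsFolder are hypothetical names introduced here, and the assumption that the project folder contains a *.csproj file comes from the usual .NET project layout rather than from the original article.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of the original framework): locates the
// TestResults folder without hard-coding the build output path.
public static class ReportPaths
{
    // Walks up from startDirectory until a folder containing a *.csproj file
    // is found, then returns "<that folder>/TestResults".
    public static string FindTestResultsFolder(string startDirectory)
    {
        var current = new DirectoryInfo(startDirectory);
        while (current != null)
        {
            if (current.GetFiles("*.csproj").Length > 0)
            {
                return Path.Combine(current.FullName, "TestResults");
            }
            current = current.Parent;
        }
        // No project file found: fall back to a folder next to the binaries.
        return Path.Combine(startDirectory, "TestResults");
    }
}
```

With this helper, the field could be initialized as ReportPaths.FindTestResultsFolder(AppDomain.CurrentDomain.BaseDirectory), which keeps working for Release builds or a different target framework folder.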

After adding the logic for integrating and creating Extent Reports in the ExtentReport.cs file, the next step is to call the relevant methods from the Hooks.cs file. In this file, we will utilize the following hooks:

- BeforeTestRun: To initialize the Extent Reports before any tests are run.

- AfterTestRun: To finalize and flush the report after all tests have completed.

- BeforeFeature: To log information before each feature starts.

- AfterFeature: To log information after each feature has finished.

- AfterStep: To log the results of each individual step in the scenario.

Updated Code of Hooks.cs file :

using BoDi;

using OpenQA.Selenium.Chrome;

using OpenQA.Selenium;

using DemoFrameworkForExtentReports.Utility;

using AventStack.ExtentReports.Gherkin.Model;

using AventStack.ExtentReports;

namespace DemoFrameworkForExtentReports.Hooks

{

[Binding]

public sealed class Hooks : ExtentReport

{

private readonly IObjectContainer _container;

public Hooks(IObjectContainer container)

{

_container = container;

}

[BeforeTestRun]

public static void BeforeTestRun()

{

Console.WriteLine("Running before test run...");

ExtentReportInit();

}

[AfterTestRun]

public static void AfterTestRun()

{

Console.WriteLine("Running after test run...");

ExtentReportTearDown();

}

[BeforeFeature]

public static void BeforeFeature(FeatureContext featureContext)

{

Console.WriteLine("Running before feature...");

_feature = _extentReports.CreateTest<Feature>(featureContext.FeatureInfo.Title);

}

[AfterFeature]

public static void AfterFeature()

{

Console.WriteLine("Running after feature...");

}

[BeforeScenario("@Jignect")]

public void BeforeScenarioWithTag()

{

Console.WriteLine("Running inside tagged hooks in a specflow");

}

[BeforeScenario(Order = 1)]

public void FirstBeforeScenario(ScenarioContext scenarioContext)

{

IWebDriver driver = new ChromeDriver();

driver.Manage().Window.Maximize();

_container.RegisterInstanceAs<IWebDriver>(driver);

_scenario = _feature.CreateNode<Scenario>(scenarioContext.ScenarioInfo.Title);

}

[AfterScenario]

public void AfterScenario()

{

var driver = _container.Resolve<IWebDriver>();

if (driver != null)

{

driver.Quit(); // Quit ends the whole browser session, not only the current window

}

}

[AfterStep]

public void AfterStep(ScenarioContext scenarioContext)

{

Console.WriteLine("Running after step....");

string stepType = scenarioContext.StepContext.StepInfo.StepDefinitionType.ToString();

string stepName = scenarioContext.StepContext.StepInfo.Text;

var driver = _container.Resolve<IWebDriver>();

//When scenario passed

if (scenarioContext.TestError == null)

{

if (stepType == "Given")

{

_scenario.CreateNode<Given>(stepName);

}

else if (stepType == "When")

{

_scenario.CreateNode<When>(stepName);

}

else if (stepType == "Then")

{

_scenario.CreateNode<Then>(stepName);

}

else if (stepType == "And")

{

_scenario.CreateNode<And>(stepName);

}

}

//When scenario fails

if (scenarioContext.TestError != null)

{

if (stepType == "Given")

{

_scenario.CreateNode<Given>(stepName).Fail(scenarioContext.TestError.Message,

MediaEntityBuilder.CreateScreenCaptureFromPath(addScreenshot(driver, scenarioContext)).Build());

}

else if (stepType == "When")

{

_scenario.CreateNode<When>(stepName).Fail(scenarioContext.TestError.Message,

MediaEntityBuilder.CreateScreenCaptureFromPath(addScreenshot(driver, scenarioContext)).Build());

}

else if (stepType == "Then")

{

_scenario.CreateNode<Then>(stepName).Fail(scenarioContext.TestError.Message,

MediaEntityBuilder.CreateScreenCaptureFromPath(addScreenshot(driver, scenarioContext)).Build());

}

else if (stepType == "And")

{

_scenario.CreateNode<And>(stepName).Fail(scenarioContext.TestError.Message,

MediaEntityBuilder.CreateScreenCaptureFromPath(addScreenshot(driver, scenarioContext)).Build());

}

}

}

}

}

This code is part of a test automation framework that integrates Extent Reports and uses hooks to manage the test flow. Let’s break down the code in simpler terms:

1. BeforeTestRun:

- This method runs before any tests start.

- It logs “Running before test run…” to the console.

- It also calls the ExtentReportInit() method to initialize the Extent Reports before running the tests.

2. AfterTestRun:

- This method runs after all the tests have finished.

- It logs “Running after test run…” to the console.

- It then calls ExtentReportTearDown() to finalize the Extent Reports and write all the results.

3. BeforeFeature:

- This method runs before each feature starts.

- It logs “Running before feature…” to the console.

- It creates a new test in the Extent Reports for the current feature (using the feature’s title).

4. AfterFeature:

- This method runs after each feature has finished.

- It logs “Running after feature…” to the console (you could add more logic here if needed).

5. AfterStep:

- This method runs after each step of the scenario (Given/When/Then/And).

- It logs “Running after step….” to the console.

- It captures the step type (Given/When/Then/And) and step name (text of the step).

Handling Passed and Failed Steps:

- For Passed Steps: If there is no error after the step (i.e., TestError == null), a node is created in the report for that step using its step type (Given, When, Then, or And), and it is marked as passed.

- For Failed Steps: If there is an error (i.e., TestError != null), a node is created for the step and marked as failed with the error message. A screenshot of the failure is also captured and attached to the report.
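The AfterStep hook repeats the same Given/When/Then/And branching once for passed steps and once for failed steps. The decision itself can be expressed in one place: pick the keyword, then pick the status from the presence of an error. The sketch below shows only that decision logic with stand-in types, so it runs without SpecFlow or Extent Reports; StepStatus, StepReporting, and Describe are hypothetical names introduced for illustration, and the "Step" fallback for unknown step types is an assumption of this sketch.

```csharp
#nullable enable
using System;

// Stand-in for the pass/fail outcome recorded in the report.
public enum StepStatus { Passed, Failed }

// Hypothetical helper (not part of the original framework).
public static class StepReporting
{
    // Decides keyword, status, and message for a step in a single place.
    public static (string Keyword, StepStatus Status, string Message) Describe(
        string stepType, Exception? testError)
    {
        // Unknown step types fall back to "Step" rather than being dropped silently.
        string keyword = stepType switch
        {
            "Given" => "Given",
            "When" => "When",
            "Then" => "Then",
            "And" => "And",
            _ => "Step",
        };

        // A null error means the step passed; otherwise carry the error message along.
        return testError == null
            ? (keyword, StepStatus.Passed, string.Empty)
            : (keyword, StepStatus.Failed, testError.Message);
    }
}
```

In the hook, the returned keyword would select the matching CreateNode call and the status would decide whether Fail is invoked with the screenshot, which removes the second copy of the branching.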

Screenshot Handling:

The addScreenshot() method is used to capture and save screenshots of failed steps to aid in debugging. The screenshot is then added to the report along with the failure details.
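Because addScreenshot() uses the scenario title as the file name, a title containing characters such as ':' or '?' would not be a valid file name on Windows. A minimal sketch of one way to guard against that is shown below; ScreenshotNames and ForScenario are hypothetical names introduced here, and the fixed list of replaced characters is an assumption chosen so the result is the same on every operating system.

```csharp
using System;
using System.Linq;

// Hypothetical helper (not part of the original framework): turns a scenario
// title into a file name that is safe to use for a screenshot.
public static class ScreenshotNames
{
    // Characters that Windows rejects in file names.
    private static readonly char[] Invalid = { '<', '>', ':', '"', '/', '\\', '|', '?', '*' };

    // Replaces invalid and control characters with '_' and appends ".png".
    public static string ForScenario(string scenarioTitle)
    {
        var cleaned = new string(scenarioTitle
            .Trim()
            .Select(c => Invalid.Contains(c) || char.IsControl(c) ? '_' : c)
            .ToArray());
        return cleaned + ".png";
    }
}
```

The screenshot path could then be built with Path.Combine(testResultPath, ScreenshotNames.ForScenario(scenarioContext.ScenarioInfo.Title)). Note that two failures in the same scenario would still write to the same file; adding a suffix per step is one way to keep both.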

In short, this code is designed to manage the reporting of each test step (whether it passes or fails) in Extent Reports, capturing screenshots of failed steps and adding them to the report for better visibility and debugging.

Once you have integrated the code into your project, simply run your feature tests through the Test Explorer. After the tests are executed, you can find the Extent report in the TestResults folder as an index.html file. We can then replicate both positive and negative test scenarios to examine the detailed results in the report.

Positive Test Results

In the positive test scenario, all the steps are executed successfully without any errors. The Extent report will show a green pass status for each step, indicating that the test ran smoothly and as expected. The report provides the following:

- Test Summary: A summary at the top will display the number of tests passed, failed, and skipped.

- Step Results: For each step (Given, When, Then, And), the report will show the status as Passed with a green checkmark.

- Detailed Logs: The logs for each step will be displayed, and no error message will be associated with passed steps.

Negative Test Results

In the negative test scenario, some steps fail during execution. The Extent report will clearly highlight the failed steps with a red error message. Here’s what you can expect:

- Test Failure Indicators: The failed steps will be marked with a red cross next to them. The error message for each failed step will be visible, showing the reason for failure.

- Screenshots of Failed Steps: Because we configured screenshot capture on failure, the report displays screenshots of the failed steps. These screenshots provide visual context for the error, making it easier to understand the failure point.

- Failure Logs: For each failed step, the error message will be displayed, and you can directly correlate it with the screenshot to understand what went wrong.

Overview of Extent Report UI

The Extent Report UI is interactive and structured to give you a detailed overview of the test execution. Here’s a breakdown of its main sections:

- Test Summary Section:

- Located at the top, this section shows an overall summary of your tests, including:

- Total Tests Run

- Tests Passed

- Tests Failed

- Tests Skipped


- Tests Section (Left-hand side):

- This section lists all the tests executed. You can see the individual test name, its status (pass, fail, skipped), and click to drill down into detailed logs and steps for each test.

- If a test fails, you can see the associated error message and screenshot (if attached).

- Dashboard Section (Left-hand side):

- This section provides a quick, high-level view of the test run with graphical charts showing:

- Pass/Fail Ratio: A pie chart or bar graph showing the percentage of tests that passed or failed.

- Trend Graphs: Line graphs showing trends of test pass/fail status over time.

- Test Summary: Summary information of the number of tests executed, passed, failed, and skipped.


Integrating Extent Reports into your C# project enhances your test automation by providing visually appealing and highly detailed test execution reports. This structure helps both technical and non-technical team members understand the test execution status in a clear, interactive format. These reports make it easier for teams to track progress, debug failures, and share results with stakeholders.

Using AllureReports Integration

Allure Report is a flexible and powerful test reporting tool that provides clear, visually appealing, and interactive test execution results. It is widely used for its ability to integrate seamlessly with various testing frameworks, offering rich and informative reports that help teams better analyze their tests.

Here, we will explore how to integrate Allure Report into our C# project. We will walk through the setup process and configuration, and demonstrate how to generate Allure reports that provide insightful visualizations of your test execution, failures, and logs. This integration will significantly improve the way you track, share, and analyze test results. Let’s dive into the steps to get Allure Report up and running with C#.

Steps to Integrate Allure Report in C#

To generate an Allure report, we first need to install Scoop on our local machine. We’ll follow the official Allure documentation to ensure seamless integration and report generation. To begin, open a PowerShell window and execute the following command:

Command : Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

This command sets the execution policy required for Scoop installation.

Next, run the following command in the PowerShell window:

Command : irm get.scoop.sh | iex

This command downloads and installs Scoop along with the packages it needs. Once completed, your system will be ready to proceed with the next steps for integrating Allure Report.

Finally, to install Allure, execute the following command in the PowerShell window:

Command : scoop install allure

This command installs Allure on your local machine, completing the setup process. Once installed, you can start using Allure to generate detailed and interactive test reports.

Next, navigate to your C# project, open the NuGet Package Manager, and search for the SpecFlow.Allure package. Install this package to integrate Allure with your SpecFlow tests, enabling the generation of detailed and interactive reports for your test executions.

After installing the SpecFlow.Allure NuGet package, the next step is to create a specflow.json file in your project. This file is used to configure SpecFlow and Allure integration settings.

Add the following code to the specflow.json file:

code :

{

"stepAssemblies": [

{

"assembly": "Allure.SpecFlowPlugin"

}

]

}

Place this file in the root directory of your project. It ensures SpecFlow and Allure are properly configured for generating enriched test execution reports.

Once the SpecFlow.Allure package is installed and the specflow.json file is created, you can proceed to run your feature tests from the Test Explorer. After executing the tests, navigate to the following directory to access the test output:

C:\Users\<YourUsername>\source\repos\<YourProjectName>\<YourProjectName>\bin\Debug\net6.0

Replace <YourUsername> and <YourProjectName> with your system’s username and project name, respectively. This directory contains the necessary files for generating the Allure report.

After navigating to the directory, open the command prompt and enter the following command:

allure serve allure-results

Then press Enter. The Allure report will open in your browser, displaying the test results.

In the Allure report, test results are displayed with clear distinctions between passed and failed tests:

- Passed Tests:

These tests are shown in green with a checkmark, indicating they ran successfully without issues. All related steps, logs, and additional details like screenshots (if configured) are displayed to help confirm the test’s success.

- Failed Tests:

When a test fails, Allure highlights the error message and provides detailed information, such as the stack trace, the failure point, and any attached screenshots or logs, helping identify the root cause of the failure.

These sections allow for easy visualization of test execution, making it straightforward to track test progress and identify issues quickly.

Integrating Allure Reports in C# gives an advanced, visually interactive way to track, debug, and share test results. It not only enhances test visibility but also makes the results more accessible and understandable for both technical and non-technical stakeholders.

By following the steps outlined above, we can easily set up Allure Reports for a project and enjoy the benefits of rich, customizable reports that help improve the test automation process.

Conclusion

In conclusion, test reporting is a crucial element of quality assurance in automation. In this blog, we’ve explored the importance of test reporting, how to set up a basic BDD framework in C#, and how to integrate powerful reporting tools such as LivingDoc, ExtentReports, and Allure Reports. The choice of the right tool depends on your team’s specific needs, but incorporating any of these will enhance testing efforts, offering improved communication, traceability, and actionable insights for projects.

We also delved into the differences between the tools:

- LivingDoc is ideal for teams focused on BDD who need a simple, feature-based report for non-technical stakeholders.

- ExtentReports is perfect for teams that require rich, interactive reports with detailed logs and media, making it great for comprehensive testing workflows.

- Allure is well-suited for teams seeking modern, visually engaging reports that can track test data over time and integrate seamlessly into CI/CD pipelines.


Happy Testing 🙂